3D Scanning (Photogrammetry) With a Rotating Platform - Not a Rotating Camera!

by Downunder35m in Workshop > 3D Printing



Update 25/11/2018: Some added tips and tricks in the last step

Update 20/05/2015: Added another step for doing the entire 3D process using only freeware.

Update 17/05/2015: Added some pics and the scan of a micro switch.

Also fixed the permissions in Sketchfab to allow the download of the models used in this Instructable.

Intro...

3D printing, for most of us, also comes with the need to design and create our own models to print.

In many cases you will use dedicated software like Blender to create your masterpiece, but there are other cases where you want to "copy" something.

Some of the high-end printers already come with a laser scanning option, but I will show you how to do it with very good results using software and a basic setup.

There are quite a few Instructables showing more or less complicated constructions to move a camera around an object, as this is what cloud-based 3D modelling requires.

Small objects or very complicated structures in particular are just easier to photograph with a fixed cam than by moving the cam around.

Once you get to sizes that require macro images, you will realise a moving cam is not always the best solution.

In this Instructable I will try to give some general info and tips on how to use a rotating platform to scan the parts you want to print.

Unlike with cloud-based services like 123D Catch, we can actually use a fixed cam position for this.

But in order to be successful we also need some software and of course some manual labour.

I won't go into all the details on how to clean up the model and prepare it for printing as this is stuff for an entire Instructable on its own.

If you are into 3D printing you will sooner or later get used to cleaning and preparing models for your printer.

What I will do is show you a way to rotate your piece instead of rotating the camera around your object to get a good quality 3D model.

The models can be used for 3D apps and games and, with a bit of preparation, for printing as well.

The model of the pot is included for download but if you want to print it you will need to clean it up a bit more.

My aim here was not to print or present a creation but to show you how to scan basically anything.

I will use a "3D approach" to 3D scanning, meaning shots from different angles around the x, y and z axes will be taken to capture all aspects of an object. Be prepared for long sessions...

The good thing about this method is that you get a model from all angles including the bottom.

You might notice throughout this Instructable that I repeat myself a few times, I did that on purpose ;)

The result of "scanning" a copper sulphate crystal using photogrammetry; if you want to know how it was done, check the following steps:

Crystal by Downunder35m on Sketchfab

And the second example from this Instructable showing the problems with hollow objects:

Pot by Downunder35m on Sketchfab

Here is the final model of a micro switch I scanned the same way as the crystal, with a lot of cam rotations.

Switch by Downunder35m on Sketchfab

The Different Scanning Methods Available to Home Users....

Laser and light patterns...

The most popular and well known solution IMHO is the David Laserscanner.

Not only can it use a cheap, hand-operated line laser (not recommended though), but also a projector for much faster and more accurate scans.

The big downside is the camera, as usually a webcam is used.

This means the resulting images can be only as good as the cam in use.

Similar for the laser: a cheap line laser with a quite thick line and stray light around it is hard to calibrate and creates models with relatively low surface resolution for details.

Another problem is the color of the object: in many cases dusting is required, as black surfaces or surfaces in the color of the laser in use are hard or impossible to detect.

There are other solutions available, some up into the professional range, as well as Instructables on how to make your own laser scanner.

Free and cloud based....

On the other hand we have cloud based solutions, like the well known 123D Catch by Autodesk.

Their main aim is modelling bigger objects and outdoor scenes, like entire buildings or cars.

Big plus is that you don't need expensive hardware and the software is free to use.

The plus is also the minus: uploading a few hundred pictures can be a painful experience without a fast internet connection.

Also you have to be aware that every model created is no longer your property.

The downside of all free services I have tested so far is that they all require a good background around your object.

This forces you to move the cam around the object and to provide a background with enough information to create a depth map that includes the object.

Photogrammetry....

Although not really new, it is mostly unknown to the 3D printer community.

Unlike the other methods, here only the information within the images is used to create a model.

The technique is basically identical to what 123D Catch uses, with the difference that software running on your own PC puts no limit on the quality or number of images.

The downside is the hardware requirements.

With high res images and great numbers of them you need a lot of RAM and CPU cores to render a model in reasonable time.

On the other hand the user has the best options in terms of creating a high quality copy in 3D.

Some freeware exists but the majority is paid software that can be a bit on the expensive side.

But if you do more than just hobby printing, it might be a viable alternative.

Direct 3D capture....

Some phones and tablets now come with multiple cameras and depth sensors to create 3D images like you would take a normal picture.

So far no software makes use of these features and possibilities.

But as such cameras become more widespread, this might change soon.

The Xbox Kinect has also been modded to be used as a direct 3D scanning system.

Quality is quite low, but the new model might fix that once the sources are available to the modding community.

The Basics of Taking Proper Images

Unlike pics you want for your family album, photogrammetry has special needs that you have to know for getting good results.

If we take the standard for many people, a laser scanning platform, I can point you to the main differences between a normal photo, an image for laser scanning and an image for photogrammetry.

In a normal pic you want to highlight what is important and even play with the focus and exposure so just the detail you like is clear in the resulting pic.

A laser scanner basically uses a black-and-white video feed to check the shape of the laser line and takes its reference information from markers on the background; these markers are also used to get the correct scale.

On the other side, photogrammetry really needs all the pixels you can provide and in the best possible quality!

Light...

You don't want to use a flash!

A flash causes highlights and reflections that can make it impossible to create a proper picture used for a 3D model.

Same for sunlight and other direct light sources.

The problem here is that the same area of an object will appear at very different brightness levels, which makes it very hard to find the correct parameters.

Also, a quality texture (if required) should be made from a separate set of images. I might create another Instructable covering accurate 3D models with accurate textures if there is enough interest.

In an ideal world you would have a light tent or light box so there are no shadows or highlights anywhere in your image.

But there are ways to compensate, which is good for the beginner and those just curious about the topic.

So take the time, if you can, to create a scanning setup that lets you work with the light in the right way; sometimes a few white plastic bags over a light are enough to remove unwanted highlights ;)

I used the worst possible scenario for this tutorial: an LED light bar, no real background cover like a green screen, and a cam with only 8MP.

Focus and exposure...

Even if your part is quite small, you want to use a high f-number (a small aperture). f/12 and higher is recommended; I prefer f/22 to get all areas of an object clear and sharp, even those a bit further away from the lens...

All areas of your part and the background should be clear and sharp as the software needs the information in your pixels to find reference points in all images and to create the depth information.
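To put numbers on the aperture advice, the standard thin-lens depth-of-field formulas can be evaluated for both settings. This is a minimal Python sketch under stated assumptions: the 45 mm focal length, the 400 mm subject distance and the 0.015 mm circle of confusion (a commonly quoted value for Four Thirds sensors like the E-300's) are illustrative values, not measurements from this setup.

```python
def depth_of_field(focal_mm, f_number, subject_mm, coc_mm=0.015):
    """Return (near_mm, far_mm, total_mm) using the hyperfocal-distance formulas.

    coc_mm is the circle of confusion; 0.015 mm is an assumed Four Thirds value.
    """
    # Hyperfocal distance: focusing here keeps everything out to infinity acceptably sharp.
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = float("inf")
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far, far - near


# Assumed example: 45 mm zoom setting, object 40 cm from the lens.
for n in (12, 22):
    near, far, total = depth_of_field(45, n, 400)
    print(f"f/{n}: sharp from {near:.0f} mm to {far:.0f} mm ({total:.1f} mm deep)")
```

Under these assumptions the sharp zone grows from roughly 25 mm at f/12 to roughly 46 mm at f/22, which is why stopping down pays off for deep objects; the price is the longer exposure times discussed next.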

A good background mainly matters because it makes creating a mask easier, but as you can see, you can do without one if you have to.

Unless you have a semi-professional light setup, you will need quite long exposure times and a tripod.

Only try freehand shots if the shutter speed is high enough to allow for an image that is clear and sharp.

Using a delay on the shutter makes sure you won't get a blurry pic from the movement of pressing the button on your cam.

Steady and sharp is the aim here.

Cams to use....

The opinions here differ quite a bit depending on which tutorial you look at.

But since we want to produce quality a good cam with a high resolution is recommended.

An 8MP DSLR with a 50mm lens is a good start, 14MP with a set of different lenses is better, and a macro lens is better still ;)

A smartphone can be used too, but it needs to be capable of producing consistent results, which is often not possible due to the limitations of the hardware and app in question. Don't let this stop you from testing it with your phone, though!

Webcams are IMHO useless for the job as they produce too much noise in the image. Although proper ones, like some Logitech models, can be set up with fixed exposure and no automatic corrections, the limit is the image quality and resolution.

The more real pixels you have in an image, the easier it is for the software to create a 3D model.

The biggest downside of webcams is that you cannot save a lossless image.

Image formats...

We want all the image information without alterations!

So the best option is to shoot RAW and create TIFF images from there. I won't go into the details, but if you need a perfect texture it often helps to work with two sets of images: one for the 3D model in high contrast, and one for the texture, optimised in contrast, brightness and so on.

TIFF and PNG images can be saved without lossy compression, and we shall use this feature, as lossy compression (like JPEG) removes information from an image: information that the software might need to create a better 3D model.
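The difference between lossless and lossy storage is easy to demonstrate. PNG compresses with DEFLATE, the algorithm Python's built-in zlib module implements, so a round trip returns every byte unchanged. The "lossy" step below is only a crude stand-in (rounding values down to multiples of 16), not how JPEG actually works, and the pixel row is synthetic.

```python
import zlib

# Synthetic 8-bit grayscale row with fine detail (values 0-255).
pixels = bytes((x * 37 + (x // 7) * 11) % 256 for x in range(10_000))

# Lossless: DEFLATE (as used inside PNG) restores the data bit for bit.
packed = zlib.compress(pixels, 9)
restored = zlib.decompress(packed)

# Crude lossy stand-in: drop the 4 lowest bits of every value.
quantized = bytes((p // 16) * 16 for p in pixels)
changed = sum(a != b for a, b in zip(pixels, quantized))

print("lossless round trip identical:", restored == pixels)
print(f"values altered by the lossy step: {changed} of {len(pixels)}")
```

The altered values are exactly the fine gradations the software relies on to match the same spot between images.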

Finding the right cam settings...

As said, we want as much detail as possible without ruining any part of the image, and we want to keep the settings consistent.

Waste some time by taking pics with different camera settings and check them on the big screen by zooming into all parts of the image - use the setting that gives you the best overall result.

If you know how to take perfect portrait shots of a person try to forget that knowledge for the time you create shots for 3D images ;)

In many cases you can set the focus back to manual and lock it for the entire set of images.

The object itself....

As long as your object is neither shiny (reflective) nor transparent, you should be fine.

Best results are possible if the object has a surface with lots of color variations as this helps to find corresponding pixels to align the images.

For example, a plain white box will be quite hard to scan this way; putting some colored stickers on it makes it much easier.

Transparent objects can be dusted with cornflour or painted with a water-soluble, removable paint.

A surface that is pretty much featureless, like the flower pot, is no problem to scan; the crystal, however, is a different story, as there are many different angles and hidden surfaces.

The rotating platform...

Here it really does not matter what you use, I used the mount for a laser level.

A lazy susan, a rotating TV stand or, for the fancy guys and girls, a motorised platform will work.

Just make sure the surface is pretty much featureless and even in color; if in doubt, place an inkjet-printable DVD or a sheet of paper over the platform and secure it.

Ensure the cover is round and centered so the masking works easier.

The Hardware....

I use an Olympus E-300 with a 15-45mm lens, resulting in 8MP images.

It is quite outdated for the job but still much better than a 12MP cam in a phone that can't be set to work with fixed settings ;)

Also the 8MP is already a bit over the limit of my PC hardware, which results in some hours of my PC calculating LOL

For starters a system with a quad core CPU, CUDA capable graphics card (basically anything that is good for gaming) and 8GB of RAM is the minimum, less than that and you really need to invest some time to calculate your 3D model.

Using a lot of images and even more information to create a point cloud means higher specs for your hardware will save you a lot of time - sometimes days....

A good system should have 32GB of RAM, a 4-5GHz 8-core CPU, two gaming graphics cards and an SSD in case the RAM runs out.

For more than 200 images you should really think about getting 128GB of RAM, as otherwise the processing speed will suffer; a dedicated server motherboard with several CPUs also helps.

The rotating platform can be anything from a dinner plate spinner, over a lazy susan to a dedicated motorised platform. Whatever fits your budget is good.

Just make sure it has a degree scale on it; if not, make one yourself by marking the rim of the platform.

Here is an image of my little one from a laser level:

On top is a DVD with some white paper.
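If you have to add the degree scale yourself, the spacing between marks along the rim is just a fraction of the circumference. A short Python sketch, assuming a standard 120 mm DVD as the platform cover; measure your own platform and substitute its diameter.

```python
import math

def mark_angles(step_deg):
    """Angles of all marks for one full turn; step_deg must divide 360 evenly."""
    count = round(360 / step_deg)
    if abs(count * step_deg - 360) > 1e-9:
        raise ValueError("step must divide 360 degrees evenly")
    return [i * step_deg for i in range(count)]

def mark_spacing_mm(diameter_mm, step_deg):
    """Distance along the rim between two neighbouring marks."""
    return math.pi * diameter_mm * step_deg / 360

for step in (5, 10, 20):
    print(f"{step} deg: {len(mark_angles(step))} marks, "
          f"{mark_spacing_mm(120, step):.1f} mm apart on a 120 mm disc")
```

At 5° steps the marks on a DVD end up only about 5 mm apart, so a fine pen helps.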

For the lights it is really best to have a proper setup; check other Instructables or Google how to create a good light box or light tent for your needs if required.

In some cases it can be good to have light from underneath the object as well, although a ring of lights at two different height levels should be enough for most tasks.

For my scans I used an LED light bar, the kind that replaces fluorescent tubes.

Don't get scared by hardware "requirements", I have an old quad core system with a GTX-460 graphics card and 8GB of RAM.

Calculating the dense cloud and high quality mesh was an overnight job both times, with the mesh taking almost 16 hours to complete.

Prices for good RAM are at an all-time high, but if you are thinking of investing in a new PC anyway, look for the option to upgrade it to 32GB of RAM if you can afford it.

The Software....

I already said cloud services are not really an option at the time of writing, but I might have missed some that do work for this.

I use Agisoft PhotoScan for this tutorial, but of course any photogrammetry software capable of masking will do the job. There is freeware as well, and VisualSFM would be the top choice in this sector.

ArcheOS is a standalone Linux distribution dedicated to the task, if you prefer a solution that you can use outside your Windows environment or are already a Linux user.

Zephyr Pro from 3DFLOW is another top software but maybe a bit out of reach for the hobby user, it has some nice features like creating your model from a video instead of single images and a very easy to use masking tool.

What you use is entirely up to you and your budget but all things I do in this Instructable should be possible with the above mentioned programs, you might have to adjust to their differences though.

Preparations....

You want to set up your workspace so there is no chance of accidentally moving the cam or object, as we will take quite a lot of images.

On your PC you might want to create some work folders for your project, one for every round of images at the same angle and height.

Make sure your batteries are fully charged. The last thing you want is to run out of juice during a shoot and have to start again because you can't exactly match the conditions of the last image before the battery died.

If in doubt have a spare battery at hand ;)

Do a last light check and make sure you don't get reflections on your object that might overexpose part of the image!

A word on the objects themselves:

As we need as much real detail as possible we don't want any shiny or translucent objects!

For shiny and translucent objects you want to work with two sets of images:

One for the texture and one with the object dusted or painted (even a white board marker can help here) to create the actual 3D model.

You can "cheat" here if required and should always keep in mind that for the purpose of 3D printing you don't really need a texture, all you need is a proper surface mesh!

Tiny parts like connection pins on circuit boards, hair, or things like the spokes on a bike can be insanely tricky or even impossible to recreate; please take this into consideration when trying to scan your objects.

The same is true for free standing parts of an object, like legs on a chair or table, those things often require a lot of detail shots of the area again from different angles.

Basically you have to make sure that every tiny detail of your object is clearly visible in at least three separate camera shots; if in doubt, use more.

Number of Images and How to Take Them....

What you might require depends on the object itself, the quality you require from your model and what your PC (or your patience) is capable of.

The usual tutorials on the net use quite simple objects like a colorful vase or figurine.

With around 20 shots each at two different heights you will get usable results fast.

However, if you require a true 3D model from all sides (including the bottom your object stands on), you will need more shots; the same applies if your main aim is accuracy.

In terms of accuracy most good programs allow you to scale the object by reference.

This means you can place a marker, like a box with 1cm sides, on your object.

The marker (or similar ones) needs to be visible in at least three shots for every round of images.
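Scaling by reference boils down to one ratio: the marker's real size divided by its size in the unscaled model. Most programs do this for you once the markers are placed; the Python sketch below only shows the arithmetic, using hypothetical measurements of a 1 cm cube's edges.

```python
from statistics import mean

def scale_factor(real_size_mm, model_lengths):
    """Factor that turns model units into millimetres.

    model_lengths: the same reference distance measured several times in the
    unscaled model (e.g. different edges of the 1 cm cube); averaging reduces
    the effect of a single bad measurement.
    """
    return real_size_mm / mean(model_lengths)

def apply_scale(points, factor):
    """Scale every (x, y, z) point of the model about the origin."""
    return [(x * factor, y * factor, z * factor) for x, y, z in points]

# Hypothetical: three cube edges measured in the raw model.
factor = scale_factor(10.0, [0.52, 0.49, 0.50])
print(f"scale factor: {factor:.2f} mm per model unit")
```

Measuring the marker in shots from different rounds helps catch a round that was aligned slightly off.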

Take a featureless, round cylinder as an example:

You need the height and diameter and that's about it, so a handful of images and some minor corrections will result in a usable 3D model (although creating this in software might be faster and easier ;) ).

If you take a chair instead, there are a lot of areas that are invisible because other parts of the chair cover them.

So you need additional shots from the underside, where the legs join and so on.

There are good tutorials out there that can show you what to look for but if you can imagine that the software can't create what is invisible on the images you will figure out quickly where you need more images once you see distorted areas or holes in your 3D model.

In such cases it pays off to have taken more pics than are actually used for the model.

For the crystal I used 216 images initially but a few did not make it into the final rendering as my PC is on the lowest specs for this task.

As a basic rule you should capture every surface from at least 3 different camera angles. This means you should check the images as you go, so you can find out where you need more pics or an entirely different placement of the object (like to get the underside).
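The "at least 3 camera angles" rule can be checked on paper for a single ring of shots. This Python sketch uses a deliberately simplified model, an assumption for illustration rather than anything the software does: a sideways-facing surface patch counts as seen when the camera looks at it within a given angle of its surface normal and nothing else on the object hides it. A patch sitting in a recess is modelled simply as having a narrower usable angle.

```python
def shots_seeing(step_deg, normal_deg, max_view_deg):
    """Number of platform positions in one full turn that see a patch."""
    count = round(360 / step_deg)
    seen = 0
    for i in range(count):
        # Turning the platform by i * step is equivalent to moving the
        # camera around the object by the same angle.
        diff = (i * step_deg - normal_deg + 180) % 360 - 180  # wrap to [-180, 180)
        if abs(diff) <= max_view_deg:
            seen += 1
    return seen

def worst_case(step_deg, max_view_deg):
    """Fewest views any sideways-facing patch gets (normals sampled every 1 degree)."""
    return min(shots_seeing(step_deg, n, max_view_deg) for n in range(360))

for step in (30, 20, 10, 5):
    print(f"{step:>2} deg steps: at least {worst_case(step, 60)} views (open surface), "
          f"{worst_case(step, 30)} views (surface in a recess)")
```

In this toy model, 30° steps leave a recessed surface with only two views, below the three-view minimum, while 10° steps give it six. Real hidden areas are worse still, which is why extra rounds with the object repositioned are needed.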

The more structural details on your object, the more images you will need, if the detail is limited to paintwork on your object you only need more if you want to capture the texture properly.

For the crystal I started with a row of images at the same height as the crystal, followed by another row with the cam a bit higher.

To get the underside the crystal was rotated on the platform and another row of images taken.

All images in 5 degree steps to get the most details as the crystal is a quite complex structure.

In the resulting camera model it looks like the cam was actually moved around the object.

Just to be clear here:

You start with the object in one position on your rotating platform and take the shots.

Depending on the level of detail required, use 5, 10 or 20° increments. I do not recommend taking fewer images, like every 30°, unless the object is really simple in structure.

Now you check the images on the big screen so you can find out if there is anything obstructed requiring additional shots.

I had to do this quite a bit for the crystal as well as for the micro switch.

Note the areas you need more details of and change the placement of the object so you can cover the previously missed areas as well - take a few more shots here, like in 5° steps instead of 10° steps.

At this stage you might have between 50 and 90 pics and it is time to create the first model.

Align the images for each chunk and if all is good merge them into a new chunk.

This merged chunk is now used to create a first model.

You should clean out unwanted outside pixels in the point cloud as well as the dense point cloud before rendering the mesh.
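Most photogrammetry programs let you delete stray points with selection tools, and some offer automatic filters. As an illustration of the idea only (not how PhotoScan filters points), here is a simple Python filter that assumes the scanned object is one compact blob and drops points unusually far from its centre.

```python
import math
from statistics import mean, pstdev

def remove_far_points(points, k=2.0):
    """Keep points whose distance from the centroid is at most mean + k * std.

    points: list of (x, y, z) tuples. k controls how aggressive the filter is.
    """
    centre = tuple(mean(p[i] for p in points) for i in range(3))
    dists = [math.dist(p, centre) for p in points]
    limit = mean(dists) + k * pstdev(dists)
    return [p for p, d in zip(points, dists) if d <= limit]
```

Hollow or elongated objects break the "compact blob" assumption, so manual selection remains the safer route for anything that is not roughly round.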

After the rendering you will see if there are areas that need more attention.

For example areas that lack all detail, areas that have unwanted ghost structures or those that look deformed.

In some cases blurry images are the cause but in any case another round of images covering those areas will fix it.

Here you have two options:

a) make a full circle and align the cams before merging

b) only take several detail shots and add them to the already merged images

Both ways work, but how well single added images align depends hugely on the object; if it does not work, take a full round as before.

If the model already looks good from all angles in the dense cloud view, you should be able to get a successful mesh and model.

Taking Your Shots and Sorting Them....

Ok, enough with the dry theory, time for some action!

You should start with a quick test run:

Set your cam at the same height as your object and take a shot every 10, 12 or 30 degrees. Make sure your object does not move on the platform; if in doubt, secure it with a drop of hot glue.

Transfer the images into the software, align the images and create a dense point cloud.

When this looks ok create your 3D object from it to check the level of detail and if some areas cause problems.

If your object seems to be OK, we now start the real shoot:

Leaving the cam untouched after the test allows you to simply add more images as required.

This way you don't have to start from scratch.

Take images at every 10° of rotation with the cam slightly below the height of the object. Simple objects can be fine with shots at every 20°; complicated ones (like the crystal I will use) can require images every 5°.

Once the set is complete take one image but without the object - this image will be used for the masking process later on.

You can rename this single image to "MASK1" or similar if it helps you to identify the masking files.

Transfer all the images of this round to the PC into a dedicated folder, for example "round1_low_level".

Now do the same with the cam slightly above the object's height, so that you shoot from about 30° down onto the object.

Again take another image without the object for the masking.

Transfer the images to the PC and place them into a new folder like "round2_above_level".

If the object is not too complicated in shape this should do and we can turn the object sideways for another round.

Since we already have most of the details we need, a single sequence should be sufficient here, but for complicated objects we might want to use the same "from below and above" method as before.

Usually it is enough here to take one sequence right at the same height with the object.

Again, don't forget to take a shot without the object to create the mask.

Transfer the images to the PC again, into a folder like "round3_object_turned".

The different folders and their names will help you when checking the cam alignment, but more on this later ;)

Now it is time to check all images with something fast like IrfanView. You just want to confirm that all parts of your object are sharp and detailed in all the shots you took; don't be tempted to mess with the images at this stage!
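Keeping the rounds, folders and mask shots straight is simple bookkeeping. The Python sketch below lists every exposure for a session; the folder names are modelled on the examples above, while the angle-based labels and the `Round` structure are hypothetical conventions for illustration, not something the software requires.

```python
from dataclasses import dataclass

@dataclass
class Round:
    folder: str      # one folder per round, e.g. "round1_low_level"
    step_deg: float  # platform rotation between two shots

def shot_plan(rounds):
    """List (folder, label) for every exposure; each round ends with the
    empty-platform image used for masking."""
    plan = []
    for r in rounds:
        for i in range(round(360 / r.step_deg)):
            plan.append((r.folder, f"{i * r.step_deg:05.1f}deg"))
        plan.append((r.folder, "MASK1"))
    return plan

session = [Round("round1_low_level", 5),
           Round("round2_above_level", 5),
           Round("round3_object_turned", 5)]
plan = shot_plan(session)
object_shots = sum(label != "MASK1" for _, label in plan)
print(f"{len(plan)} exposures, {object_shots} of them with the object")
```

Three rounds at 5° come to 216 object shots plus three mask images, which is where a figure like the crystal's 216 images comes from.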

A few pics with blurry areas are usually not a big deal, if you have the problem with a whole sequence take new images.

Some blur in an image can be compensated by the software, and if images are unusable or cause bad results you can discard them at a later stage. It is better to have too many images than not enough.
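If you want a second opinion besides your eyes, a common sharpness measure is the variance of the Laplacian: sharp edges give strong responses, while blur flattens them. This pure-Python sketch works on a grayscale image given as a 2D list; loading your photos into that form (for example with an imaging library) is left out. There is no universal threshold, so compare scores within one sequence and inspect the lowest ones first.

```python
from statistics import pvariance

def sharpness(gray):
    """Variance of the 4-neighbour Laplacian over the image interior.

    gray: 2D list of brightness values, at least 3x3, rows of equal length.
    Higher values mean more fine detail; blurry images score low.
    """
    responses = []
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            responses.append(gray[y - 1][x] + gray[y + 1][x]
                             + gray[y][x - 1] + gray[y][x + 1]
                             - 4 * gray[y][x])
    return pvariance(responses)

def rank_by_sharpness(images):
    """Return image names sorted from blurriest to sharpest.

    images: dict mapping a file name to its 2D grayscale pixel list.
    """
    return sorted(images, key=lambda name: sharpness(images[name]))
```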

In case you want the best results in terms of texture now is the time to create two sets of images from your RAW pictures but I will not cover this in my Instructable today as for most uses it is simply not required.

For printing a 3D model you only need to create a proper mesh but not a texture.

Creating a 3D Model From Your Collected Shots....

Now it is time to fire up our photogrammetry software. I will use PhotoScan as it is my personal preference (and because I have no money to buy more software).

We start a new workflow by creating new "chunks" for each of our image sequences, for my crystal I need three.

Using different chunks allows the software to create an alignment for each sequence, which is faster and more accurate than trying with all the images mixed up.

As our cam position is fixed, the software can only use the object itself for reference. Aligning each chunk individually makes it possible to combine the chunks at a later stage by referencing the model instead of the separate cam positions.

With three chunks created, a double click on the first one sets it "active".

We now add the images of the first sequence but without the image of the mask.

All images will show up as "NC" "NA", meaning they are not calibrated and not aligned.

The calibration can be useful but for my purposes I will ignore it and let the software do it based on the EXIF info of the image.

Now we can import the image without the object to be used as a mask: the software compares each image with it and automatically masks everything that has not changed, i.e. the empty background.

As the cam never moves we can use a single image for the entire sequence.

To get the settings right I start with just one image and adjust the slider until most or all of the background is masked without compromising the object itself.
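The principle behind masking from a background image can be sketched in a few lines: compare each pixel with the same pixel in the empty-platform shot and mask it if the difference stays below a tolerance, which is roughly what the slider controls. This is a generic background-subtraction sketch in Python, not PhotoScan's actual algorithm; images are assumed to be same-size 2D lists of grayscale values taken from the same fixed cam position.

```python
def background_mask(image, background, tolerance):
    """True where a pixel matches the empty background closely enough to be masked."""
    return [[abs(p - b) <= tolerance for p, b in zip(row, bg_row)]
            for row, bg_row in zip(image, background)]

def masked_fraction(mask):
    """Share of pixels that were masked; handy when tuning the tolerance."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total
```

A shadow the object casts on the platform differs from the empty shot and therefore survives the mask, while dark parts of the object that resemble the background get masked as the tolerance rises; both are reasons why the masks still need checking by hand.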

In the image below, the white line marks the masked area.

Here it really pays off to have a light tent or at least a "green screen", as otherwise a lot of manual labour is required to mask the object properly.

As you can see in the images I did not bother with any of the good stuff to show that the entire process is possible with a totally basic setup if on a budget.

For a good mask on a worst case background like this you will have to do some manual correction to the mask to get this result.

Using a proper green screen and good lighting can prevent this, but I wanted to show that even the most basic setup is usable for this purpose.

Once the right slider setting is found we do the same again but for the entire chunk of images by selecting the masking image and applying it to all images in the folder (now you know why we used different folders ;) ).

Check every single image and correct the mask as required! You don't want an image in the set with a bad mask or unmasked background areas!

On the left you can see the 3 chunks containing the images from the three rows.

Do the same for all sequences / chunks.

Did I mention photogrammetry with a single cam and a rotating platform can be time consuming? LOL

Once all masks are ready it is time to do the magic :)

The big difference between online services and our manual approach is that we can interfere with the process.

Using a rotating platform is usually avoided for 3D scanning using a cam but we can ignore this "fact".

Select the first chunk and let the software align the images, you can stick with the default values but should make sure the tickbox to use the mask (constrain to mask) is set.

If you have an option to select pairs or define the type of image set (e.g. rotational, directional, aerial), select the right one to speed up the process and have fewer problems with the cam alignment.

It will take some time, depending on the number of images, resolution and hardware in your PC.

Repeat the process for all chunks in the workflow.

With luck everything goes perfectly, but in reality some cams will be "misplaced" by the software.

In the model view you can spot them as they are not in the same nice circle as the other cams.

In most cases this can be corrected by removing the alignment info of the affected cam and two or three more on either side.

We simply select these cams and perform an alignment just for them.
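Spotting misplaced cams can also be done numerically, since within one round all cams should sit at about the same distance from the ring's centre. This Python sketch assumes you can export the estimated camera centres of a chunk in shot order; it only checks the distance from the centre (a cam at the right distance but the wrong height slips through), and the 10% tolerance is an arbitrary starting value.

```python
import math
from statistics import median

def cams_to_realign(positions, tolerance=0.1, neighbours=2):
    """Indices of cams whose alignment should be reset and redone.

    positions: (x, y, z) camera centres of one round, in shot order.
    A cam is suspicious if its distance from the ring centre differs from the
    median radius by more than `tolerance` (as a fraction). Its `neighbours`
    on either side are included too, wrapping around the circle.
    """
    n = len(positions)
    centre = tuple(sum(p[i] for p in positions) / n for i in range(3))
    radii = [math.dist(p, centre) for p in positions]
    typical = median(radii)
    suspicious = [i for i, r in enumerate(radii) if abs(r - typical) > tolerance * typical]
    flagged = {(i + k) % n for i in suspicious for k in range(-neighbours, neighbours + 1)}
    return sorted(flagged)
```

Including two neighbours on each side mirrors the manual approach of resetting the stray cam together with a few cams around it.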

As you can see, we now have a nice ring of cams around our object, any cam in a misplaced spot should be either corrected or deleted.

If a larger number of cams is affected it pays off to put them into a new chunk.

This way you can align both areas and when done merge them back into one chunk after aligning the chunks.

Only in severe cases will you have to remove a bad cam.

Repeat this for all chunks.

Now comes the part that is important for the quality of the end product:

Once we have a nice circle of cams for each chunk we optimise the camera locations through the software.

Doing this for each chunk will use the already generated match points to align the cams as well as possible.

Again, we do this for every chunk we have by selecting to optimise the cams.

The explanation for this is simple: in my example I have 72 pics per chunk, each almost 24MB.

Aligning 72 images in one plane as pairs is much simpler than trying to do the same with 216 or more images scattered throughout three-dimensional space.

Also the accuracy is much better since we have a fixed cam position.
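The numbers behind this are easy to check. The sketch below assumes the E-300's full resolution of 3264 x 2448 pixels held in memory as 8-bit RGB, and counts image pairs as if every image were compared with every other one; real programs usually preselect pairs to cut this down, so treat the pair counts as an upper bound.

```python
def image_bytes(width, height, bytes_per_pixel=3):
    """Uncompressed size of one image (3 bytes per pixel = 8-bit RGB)."""
    return width * height * bytes_per_pixel

def exhaustive_pairs(n):
    """Distinct pairs if each of n images is compared with every other one."""
    return n * (n - 1) // 2

size = image_bytes(3264, 2448)  # assumed E-300 full resolution
print(f"one image: {size / 1e6:.1f} MB, 216 images: {216 * size / 1e9:.1f} GB")
print(f"three chunks of 72: {3 * exhaustive_pairs(72)} pairs, "
      f"all 216 at once: {exhaustive_pairs(216)} pairs")
```

Under these assumptions one image is about 24 MB, and three chunks of 72 need roughly a third of the pair comparisons of a single 216-image chunk.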

The next step of the magic is to align the chunks together: instead of aligning the images within a chunk, we align the complete chunks against each other. This gives us the 3D information and cam locations within the entire work area.

Last but not least step here is to merge all chunks into one.

This step can take some time depending on your hardware and the quality level you selected for the previous steps.

After that we can see all cams at once in the model view.

It looks a bit weird like this, as we did not move the cam but instead changed its height and turned the object sideways on the turntable.

Looking closely you might notice the object is "upside down" as the higher cam shots are below the straight one, but this does not matter at all for the process.

We have now successfully fooled the software into thinking we actually moved the cam around the object :)

Of course all this does not give us a 3D model yet, we have to generate a dense cloud that defines the exact location of the pixels we created earlier within our 3D model.

Be prepared to read something or to take the dog for a walk while this is happening.

Especially when creating a model in very high quality and resolution (as well as with many control points) it is very time consuming. A fast graphics card or two will certainly speed the process up a bit; otherwise substitute with CPU cores.

Here is a low quality rendering of the crystal, a setting you would only use for objects with a simple structure, like a coffee cup.

Adding a simple texture makes it almost look good already, but we did not go through the trouble of taking so many pics for nothing...

Check the difference if a high quality mesh from a quality dense cloud is generated:

Mind you that no depth filtering was used, which would have created a much smoother surface, but I wanted to see the full level of detail based on 8MP images.

If we add a quality texture to this we have something that looks almost like the original:

And for you to play with, the 3D animation:

Crystal by Downunder35m on Sketchfab

If anyone wants to try with my set of images I can upload them (the model can be downloaded by opening the object directly on Sketchfab).

Use the mouse to move, rotate and zoom.

Difficult Objects....

The crystal was hard because of all the different surfaces facing in all sorts of directions.

Now I will show you the limits of photogrammetry using a flower pot from my garden.

The technique is exactly the same as before:

Taking a few rows of images covering the entire object.

Masking and aligning them.

Creating a dense cloud and mesh.

Adding a texture.

The pot is shaped like a puppy dog, almost like a shoe.

Here you can see it from the front with the mask already applied:

I created the mask manually, as with a shape like this that was easier than correcting the background mask.

Here you can see the model as a mesh with all the cams around it:

As always in life, the devil is in the detail:

You can see the artefacts on the inside, as the software did not have enough information to resolve this area correctly.

Hollow objects like this usually need either a lot more images or some manual labour with a 3D program to remove the unwanted areas.

These errors happen due to the shape and the varying lighting.

It is next to impossible to create the right conditions to get the errors removed at the scanning stage.

In some cases it can help to use an LED ring around the lens to illuminate the inside of the object properly.

The downside is that it would require another set of images with quite some work aligning them correctly.

The best approach would be to take images at 5 to 10 degree intervals in a RAW image format and to use good imaging software to adjust the brightness and contrast levels evenly throughout the set.

When finished, save the images as TIFF.
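The adjustment above is done by hand in a good imaging program. Purely to illustrate what "evenly throughout the set" means, here is a minimal Python sketch that scales each image so its average brightness matches the average of the whole set. It assumes the images are already loaded as NumPy float arrays with values from 0 to 1 (reading RAW or TIFF files is left out), and it only applies one brightness gain per image; contrast is not touched.

```python
import numpy as np

def equalize_brightness(images):
    """Scale each image so its mean brightness matches the average of the whole set.

    images: list of float arrays (H x W or H x W x 3) with values in [0, 1].
    Returns new arrays; values are clipped back into [0, 1].
    """
    means = [float(img.mean()) for img in images]
    target = sum(means) / len(means)  # common brightness level for the set
    out = []
    for img, m in zip(images, means):
        gain = target / m if m > 0 else 1.0  # leave a completely black frame alone
        out.append(np.clip(img * gain, 0.0, 1.0))
    return out
```

With one image averaging 0.4 and one averaging 0.6, both end up at 0.5. A single gain per image is the simplest possible choice; a real editor gives you much finer control, so treat this more as a way to spot badly exposed shots.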

The same is true for the surface itself.

Unless you want to take a lot more images, it is easier to smooth the surface in a 3D program if required.

The pot with a simple texture looks much better though:

Pot by Downunder35m on Sketchfab

Mind you, this was just to show you the limits in terms of hollow objects and not to create a high quality scan.

With another set of images around the hole and proper lighting it should be possible to make the artefacts disappear without editing, resulting in a more accurate model.

Miniature Scans...

Sometimes we have an object we would like a model of, but normal ways of scanning it fail due to its tiny size.

To show what is possible, take a look at this micro switch in front of a micro SD card:

You are right - this is tiny....

To create the scan with a rotating platform I had to use a macro lens, as otherwise the switch would have been too small in the images.

With this I also noticed the big downside of macro lens adapters: the distortion increases the closer you get to the edges of an image.

As I found out after all alignment attempts failed with the first set of images, keeping the object within roughly the inner 50% of the image works fine.

To scan such a tiny object some preparations are required.

As you can see I used a marker on the shiny metal front as otherwise the reflections from the lights mess too much with the image quality.

It is also quite hard to align the images, as macro images often have some blur due to the limited depth of focus.

With the main color black and a lot of tiny surfaces it was necessary to take four rows of images as you can see here:

The alignment was a nightmare and I was usually lucky to get this for a row of cams:

By using markers I was able to align the images in a chunk although I had to combine this technique with the manual re-alignment mentioned earlier.

I also noticed that merging the dense clouds for several chunks is not always the best way.

The result was a lot of unwanted "ghost" shadows, as the size calibration did not work too well.

Just aligning the chunks and merging them without the dense clouds worked much better, but required the calculation of the cloud data from scratch.

At this point I would like to mention that all camera optimisation should be performed on the chunks containing only one row of images!

Doing this on merged chunks can result in most cams being misplaced all over the place.

After about 18 hours of having an unusable PC busy with calculations I got the dense point cloud data:

The basic shape is already visible but becomes clearer after generating the mesh:

Only after creating a solid mesh I was able to see that using mild depth filtering for the dense cloud generation was not the smartest move.

Although the model looks nice and smooth, some details got lost.

I had a previous low quality model that showed more details, especially on the six "holes" in the back of the switch.

But after spending so much time on the model I did not recalculate it without the depth filtering; I might do so later when I have no other use for the computer.

The finished model still looks quite good, considering the size of the object:

Switch by Downunder35m on Sketchfab

Doing It for Free - Using Freeware...

If you are not using 3D scanning or printing professionally you might be reluctant to spend a few hundred bucks on software you only use once or twice a year.

But there is a solution for this problem in the freeware section :)

The entire process is quite similar to using professional software, but there are differences:

a) We have to use more than one program.

b) There is no support for lossless TIFF images, so we need to use or convert to high quality JPG images.

c) There is no masking either, so there is more cleaning up to do, but more on that later.

We start with VisualSFM:

I will use the entire image set I created for the crystal scan - all 288 images.

As VisualSFM does not work with TIFF files they need to be converted into JPG images.

This was done using the batch function of the freeware IrfanView, keeping the EXIF data and using the least compression possible (100% quality).

When you start VisualSFM you see a screen like this:

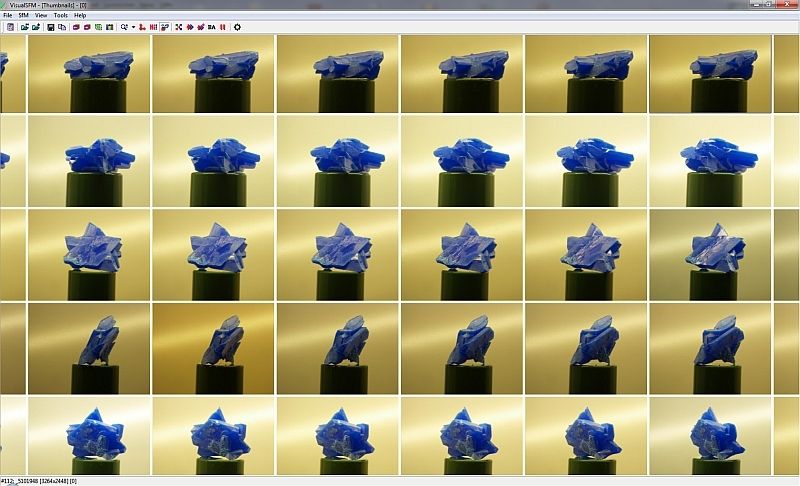

We load all 288 images for the crystal:

So we can see all images as thumbnails in the work area:

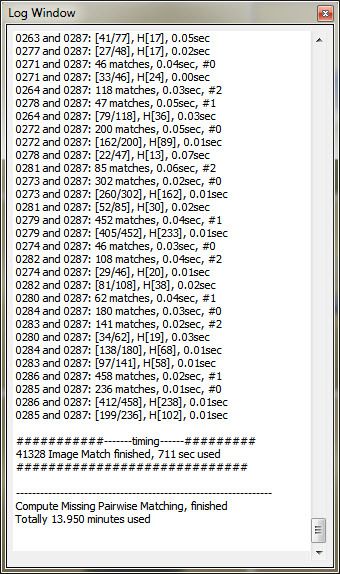

Next step is to click on the button to compute the missing matches.

As you can see it did not take too long and you can watch it happening on the screen - quite nice actually.

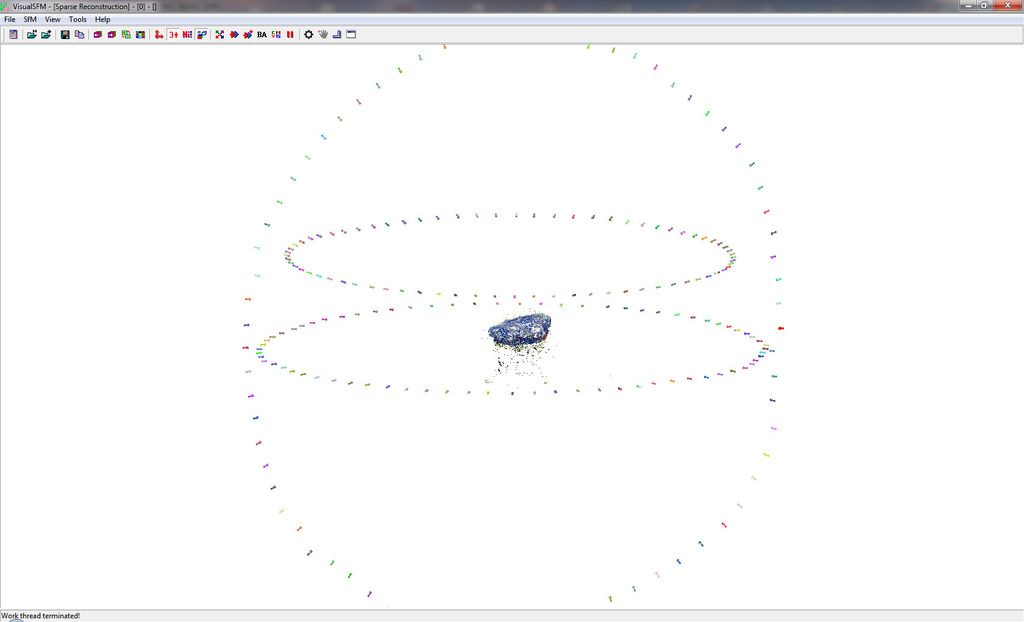

Here the program will align all cams and you can change the view to 3D to see it.

To generate a dense cloud we have to specify a filename, as this step is done by an external DLL; we will use this file in the next step.

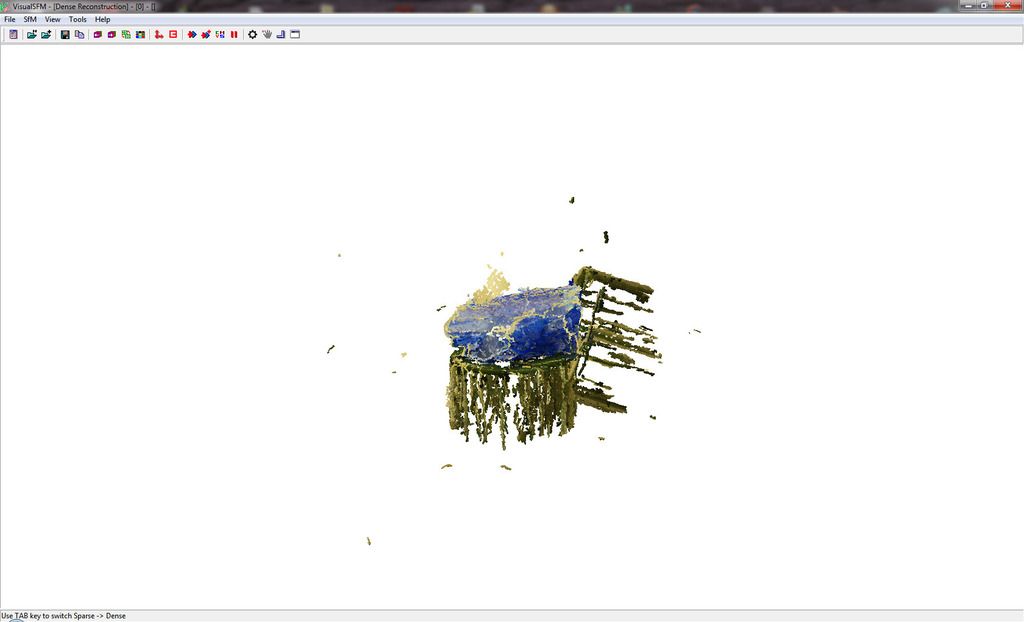

After generating the dense cloud we see a weird looking model:

There is quite a bit of unwanted stuff generated, not to mention parts of the background and cap.

But this can't really be fixed here so we do that in Meshlab.

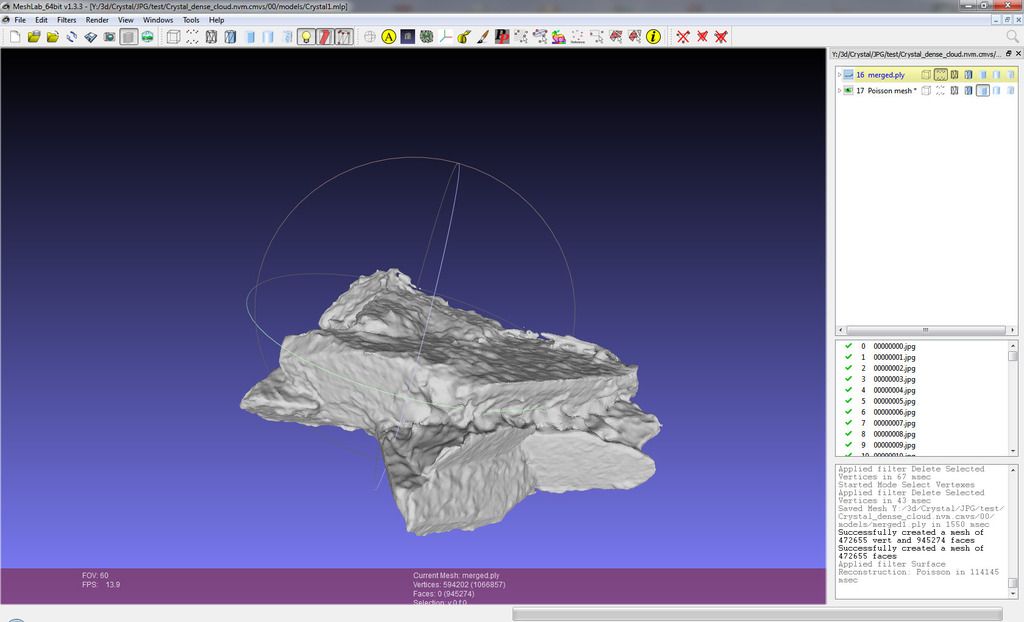

Within the folder for our images there is now another one for the dense cloud.

I named mine Crystal_dense_cloud during the generation of the dense cloud, so the folder name is

Crystal_dense_cloud.nvm.cmvs

as generated by the plugin.

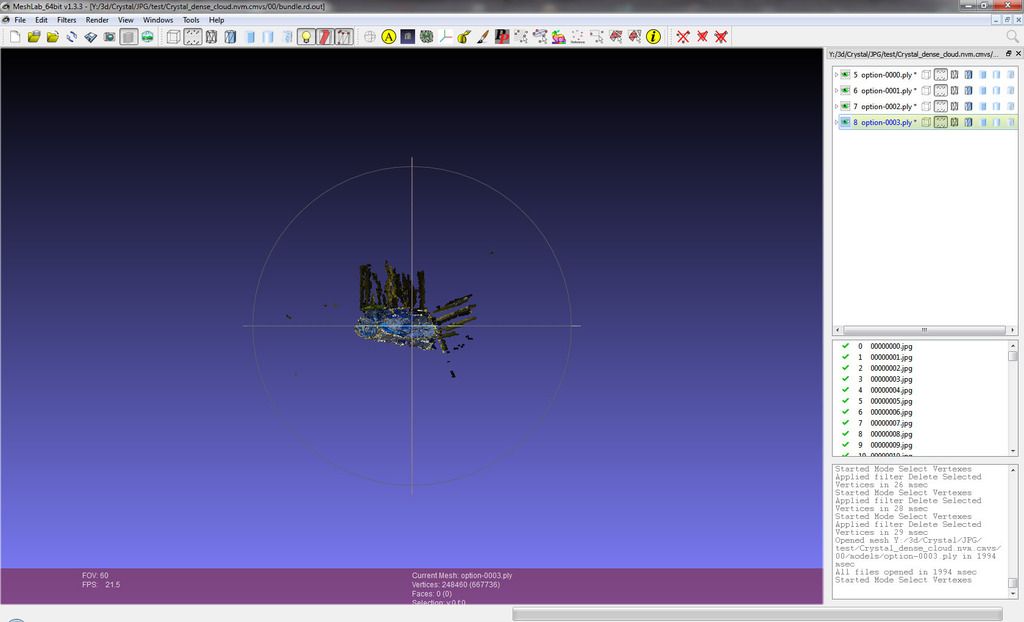

In this folder we find a lot of things, but for now we only check the folder "00" as it contains the file bundle.rd.out - we load this into MeshLab.

In the layer dialog on the right side of the window we right-click on the existing project and select "Delete current mesh" - instead of the low quality mesh generated for the alignment of the images we want to use the mesh we created in VisualSFM.

To do so we click on "File", "Import Mesh" - the one we want is located in the "models" folder.

In my case there were 4 of them, but never mind a few more ;)

Now comes the painful work of cleaning up the mesh.

It is easier to import one mesh at a time, as otherwise you have trouble switching back and forth between the meshes to find the one containing the points you want to select.

With a normal render and a single mesh file you won't have this problem anyway.

As an alternative to doing everything multiple times, you can merge the parts into one using a filter:

"Filters", "Mesh", "Flatten Visible Layers".

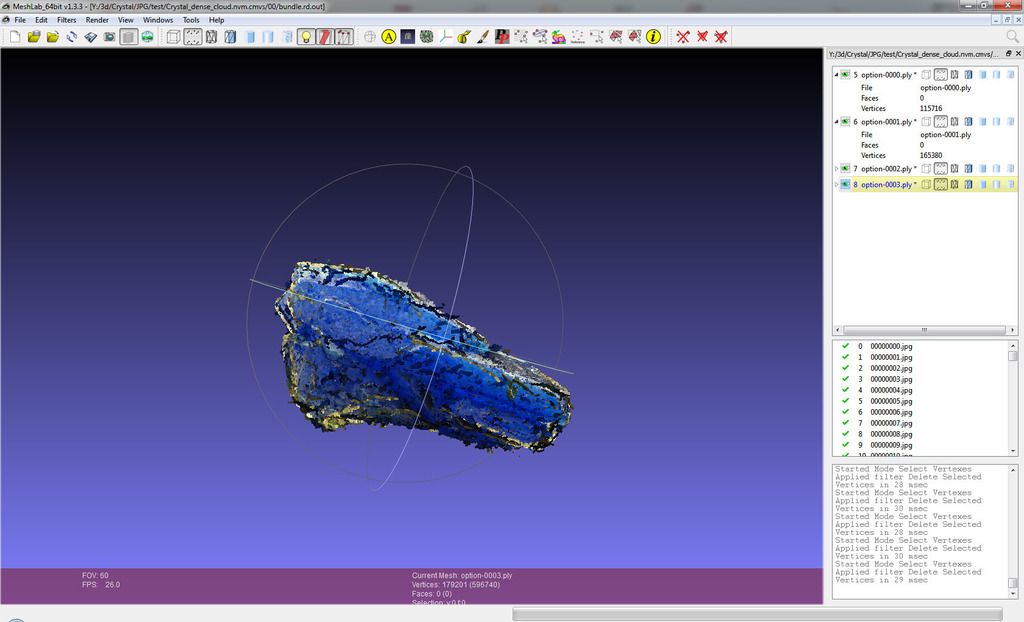

I only did a very quick job removing the worst bits without going into too much detail.

For your project you should try to remove as many unwanted points as possible without deleting those that belong to your model.

It helps to have it clean, as otherwise more work on the model is required in the final stages when you clean it up for a 3D print.

But as I said, I did not bother too much for instructional purposes here.

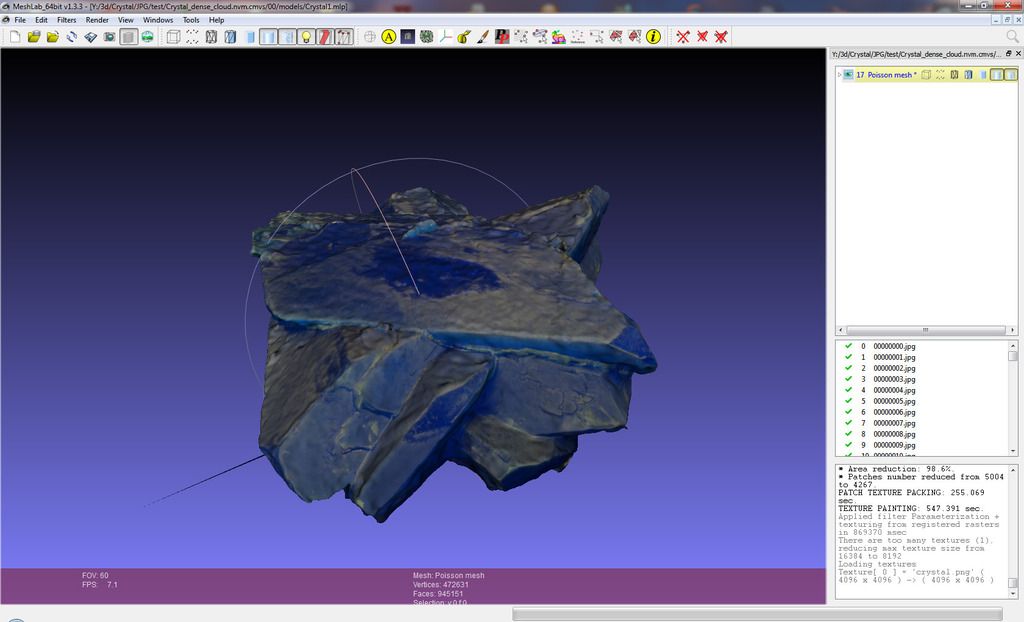

With a clean cloud we can now click on "Filters", "Point Set", "Surface Reconstruction: Poisson" - this will generate a surface for our model.

As expected with little cleaning work the resulting mesh is not extremely detailed and has some clutter still on it - proper cleaning helps as much as a proper green screen background for the images.

If in doubt you can also try to mask the object in a graphics program and delete everything but the object itself before saving the image again - make sure the EXIF data is kept for this.

We can now add a texture if you require one, or save the model as PLY, STL, OBJ or another file format for other uses.

For a texture we again apply a filter:

"Filters", "Texture", "Parameterization + Texturing from registered rasters".

If you get an error about non-manifold geometry or similar, you need to apply the corresponding filter to select and delete those problem areas - don't forget to click on the delete symbol as you did for the points.

When we click on the textured view it does not look too bad considering we paid nothing for it:

And to finish, here is the 3D view:

Crystal2 by Downunder35m on Sketchfab

Files Used in This Instructable....

In case you want to try the examples I can upload the images used.

So far I have only uploaded the images for the pot, as the file size is quite a problem.

The crystal is 5GB in 216 images...

In any case I recommend using the program Jdownloader if you are not familiar with file hosting services.

This way you won't be fooled by ads, clicking wrong buttons or ending up in the Usenet.

All you have to do is copy the download link into the clipboard and Jdownloader will prompt for all required steps including download passwords or captchas if required.

Find the images for the pot here (1.8 GB unpacked, 975 MB download size):

Download the images and OBJ file using Filefactory

You will need Winrar or a compatible packer to unpack the archive.

All sequences are in separate folders already, so you don't have to sort through them.

The masking image is the last one, the one with the highest number in the filename.

I fixed the settings in Sketchfab so the models can be downloaded now.

Some Additional Tips...

Some programs don't have a masking feature built in but can use masking images.

In this case you can use a program like Photoshop to create masks for every single image in batch.

Refer to the program manual for the correct filenames to use, usually it is something like "mask_imagename".
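If your program expects mask images, a green screen background makes them easy to generate in batch. The sketch below is one assumed approach, not what Photoshop or any particular scanning program does: a pixel counts as background when its green value is clearly higher than both red and blue. The `margin` of 40 is a guess you would tune for your own lighting, and the `mask_` naming only follows the example pattern mentioned above, so check your program's manual (including whether it expects white or black for the object).

```python
import numpy as np

def green_screen_mask(rgb, margin=40):
    """Return a uint8 mask: 255 where the object is, 0 where the green background is.

    rgb: H x W x 3 uint8 array. A pixel counts as background when its green value
    exceeds both red and blue by more than `margin` (an assumed, tunable threshold).
    """
    img = rgb.astype(np.int16)  # widen so the subtraction cannot wrap around
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    background = (g - r > margin) & (g - b > margin)
    return np.where(background, 0, 255).astype(np.uint8)

def mask_filename(image_name):
    # Hypothetical naming scheme ("mask_" + image name); check your program's manual.
    return "mask_" + image_name
```

A simple colour threshold like this struggles with green reflections on the object itself, so check a few masks by eye before running a whole folder.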

Some objects can be quite tricky to align.

If most of the images align well but from a certain image on they misbehave, for example forming a spiral or all ending up in the same spot, you can separate the good ones from the bad ones into chunks.

Align the good ones again and then the bad ones in the new chunk.

Now align those two chunks against each other (if the bad ones got fixed) and you can merge them.

Delete the unmerged chunks once satisfied with the result.

Objects you scan, no matter if this way or by means of lasers, will always require some corrections before you can print them.

In most cases this includes holes in the mesh, a high quality mesh that needs to be simplified to avoid stressing the printer, unwanted artefacts and basically anything that makes your model look "not right".

A lot of this hassle can be avoided by cleaning the dense point cloud before creating the mesh.

Often you find stray dots far outside your object.

These dots are errors and if removed make creating a correct mesh much easier.
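Point cloud tools usually offer some kind of outlier filter for exactly these stray dots. To show the idea, here is a minimal NumPy sketch of a simple statistical outlier filter (not the specific filter of any program mentioned here): every point gets the mean distance to its `k` nearest neighbours, and points that sit far above the typical value are dropped. The values `k=8` and `std_ratio=2.0` are assumptions, and the brute-force distance matrix only suits small clouds.

```python
import numpy as np

def remove_stray_points(points, k=8, std_ratio=2.0):
    """Drop isolated points from an N x 3 cloud (a simple statistical outlier filter).

    For every point, take the mean distance to its k nearest neighbours. Points whose
    mean distance is more than std_ratio standard deviations above the average are
    treated as stray dots. Brute force O(N^2): only suitable for small clouds.
    """
    pts = np.asarray(points, dtype=float)
    diff = pts[:, None, :] - pts[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))  # N x N pairwise distances
    np.fill_diagonal(dist, np.inf)            # ignore each point's distance to itself
    k = min(k, len(pts) - 1)
    nearest = np.sort(dist, axis=1)[:, :k]    # k smallest distances per point
    mean_d = nearest.mean(axis=1)
    limit = mean_d.mean() + std_ratio * mean_d.std()
    keep = mean_d <= limit
    return pts[keep], keep
```

On a small 3 x 3 x 3 grid of points plus one dot far away, the far dot is flagged and the grid survives. For a real dense cloud with millions of points you would use the filter built into your software instead.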

Most printers come with bundled or suggested 3D software for your creations, but some of it is quite limited when it comes to correcting errors.

Blender and other programs can help you out here to get the best possible result for your print.

Heads or complete persons....

Of course you can use the same method to create a very high quality 3D model of a person, or just a head.

The problem here is making sure there is no movement other than the turning.

A removable head rest for the front and side shots is a good idea and a stick under the chin for the images of the back.

However, using multiple cams with a synchronised shutter on all of them makes the task quite easy.

If you are not after high end quality for your own game, cheap point-and-shoot cams will do the trick here.

The less background you get into an image the better.

Another approach is to use a quality video cam and software to extract frames from the video for processing.

When doing so you should make sure to use the best video quality possible, with as little compression as possible.

Automatic focus, brightness and shutter settings should be disabled on the video cam.
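With video you still want frames at fixed angles, just like with stills. No numbers are given here, so the following Python sketch assumes the platform turns at a constant speed and the recording starts at 0°; it works out which frame numbers to extract for one full turn. The frame rate and rotation time in the example are made up.

```python
def frame_indices(fps, seconds_per_rev, step_deg):
    """Frame numbers to extract for one full turn, roughly one frame every step_deg degrees.

    Assumes the platform turns at a constant speed and recording starts at 0 degrees.
    """
    frames_per_rev = fps * seconds_per_rev
    n_shots = int(round(360 / step_deg))
    return [int(round(i * frames_per_rev / n_shots)) for i in range(n_shots)]
```

At 30 frames per second and 60 seconds per turn there are 1800 frames per revolution, so a 5° step means every 25th frame (72 frames in total). Any speed variation of the turntable shifts the real angles, so check a few frames by eye.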

Tiny objects...

I am still waiting for my macro lens and some suitable insect or similar to come along.

But once the lens arrives I will add another step showing how to use this scanning method on something a laser scanner or even DLP scanner would be unable to pick up.

The trick here is to present your object on a thin pin or rod so it stands above the rotating platform, allowing you to get the cam close enough.

In some cases a tele lens at the closest possible range or a "reverse adapter ring" is an alternative to a macro lens.

The pin / rod should be non reflective and of uniform color, same color as the "green screen" in the background.

We need a lot of light for those shots, so a proper setup and some time preparing the lights pays off.

For more info on the problems with tiny objects please refer to the step with the micro switch scan.

Camera optimisation....

It really helps to have all images aligned as well as possible, and the cam optimisation can help here.

A thing to remember here is that you should only use this on chunks containing a single row of images.

Doing so on merged chunks can mix the cam positions to a useless mess.

But it makes sense if you think about it as the first step is always to get the best result for a chunk before merging it.

So if the images are all aligned properly, the merging will keep these settings and only adjust the scale if required.

I will add more in this step based on the comments and problems people encounter.....

Tips and Tricks for Those in Need....

To be honest I never had much use for the bottom part as the printing usually takes care of that bit anyway.

But of course there are options for those with a real need for a complete model.

If you are doing a lot you might want to use the extra info and your experiences so far to make an updated version?

Anyway, some basics to get on the same level:

You really want tons of pictures!

And you need as many angles of your object as you have hidden areas.

For example a coffee mug can be really simple at first glance - until you try to fix the hidden areas of the handle ;)

Having some extra pics for those areas certainly helps!

The clue is to create a full circular view with overlapping pics at fixed angles.

Each angle round can be used for a simple point cloud if processing power and memory are limited - I struggle with 32GB of RAM...

Once you see a basic model forming you might notice some areas that cause you headaches - like the handle in the above example or the inside of a pot that is not just round.

I learned that it is far easier to leave the setup as it is and to check the round of pics taken for usability first.

Problem pics are removed and replaced by new ones, if in doubt more than before.

Something that can't be fully fixed from this angle gets some extra pics before the next angle round - sometimes at totally different angles to fill hidden areas.

I keep these extra pics separate from the sequences for later use.

The next angle round should be well overlapping if the model is complex!

Simple stuff can do with 3 or sometimes just 2 different angles, really complex stuff might require 6 or more rounds of pics.

But in a lot of cases you can avoid complete rounds if you get the basic shape right - the missing bits can be formed through the additional chunks you take for problem areas at different angles.

Just to be clear: if the first round was at 40° from above and the next one is flat at 0°, then the extra chunks in between should be taken at angles other than 0° or 40°.

Again, create a simple model before setting up for a new angle to actually see where you might need more pics or where a pic was too bad to be used.

The worst is to have hundreds of pics and to find out it is still not enough because one area has some blank spots left.

Finally and usually after many hours of hard work it is time to create a full model.

There are many ways to do this but you might need more than one depending on the hardware you have in your computer.

Stitching 50 pics of 5MB each already requires 250MB of RAM just to keep them accessible.

During stitching this memory requirement can easily increase tenfold!

The problem is that quite often it is impossible to create, let's say, 20 partially formed pieces and then combine them into a full model.
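Keep in mind that the file size on disk is not the whole story: a compressed JPG gets unpacked in memory, so a rough estimate per image is width x height x channels x bytes per channel. This small Python helper does that arithmetic; the `overhead` factor is an assumption standing in for the extra working memory of the stitching software (up to tenfold, as mentioned above).

```python
def decoded_size_mb(width, height, channels=3, bytes_per_channel=1):
    """Memory needed to hold one decoded image, in megabytes (1 MB = 1024 * 1024 bytes)."""
    return width * height * channels * bytes_per_channel / (1024 * 1024)

def set_size_gb(n_images, width, height, channels=3, bytes_per_channel=1, overhead=1.0):
    """Rough memory for a whole image set in gigabytes.

    `overhead` is an assumed multiplier for the extra working memory of the stitching software.
    """
    per_image = decoded_size_mb(width, height, channels, bytes_per_channel)
    return n_images * per_image * overhead / 1024
```

For example, a 4000 x 3000 RGB image at 8 bits per channel takes about 34 MB once decoded, so 300 of them need about 10 GB before any overhead.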

Without the full geometry this way often results in bad deformations.

For me the best way is to create the first round and to then add chunks of pics in the right order.

Requires less memory but can mean some more manual adjustments.

I tried to get around the computing problem, especially the memory part but the only really working option is to reduce the image resolution.

Since the camera is fixed you can create masks to remove the background - 30% less background in an image after resizing it makes a huge difference for a collection of 300 or more...

It makes sense to utilise what is available on your camera sensor too, so don't take pics where the object is too tiny and the background is everything ;)

Keep the light bright and even!!

Fix the camera settings!

Do not use automatic modes of any kind!

Every pic you take needs the same light, amount and brightness - avoid using the flash if you can.

Color or brightness differences make the stitching much harder than it should be...

Actually resizing the images or not??!!

Some people create their images with their new smartphone and realise too late that all pics are in 20 megapixel resolution - even a top end gaming PC will give up here.

If the image quality and the object in question allow it, then resizing can be a good option - if done correctly and with planning ahead!

For this you want the images in RAW format or, if that is not possible, in highest quality JPG or, better, PNG.

Now: Reducing the resolution means losing details!

If you need extremely fine details you might have a problem...

The best way to reduce images for stitching in a 3D way is to use logic ;)

All digital images are made from square pixels arranged in a grid.

So dividing the resolution by 3 is a really bad idea...

Cutting it in half means every 4 pixels have to be squeezed into 2, which does not fit the square grid - depending on the algorithm used the result can be (a bit) blurry, so test and check in a good enlargement first.

But dividing by 4 means that 4 pixels that formed a 2 x 2 square will now form a single pixel - and again a square, because width and height are simply halved!

This is the best compromise between size and detail as the algorithm does not need to create "odd" pixels.

For really high res pics dividing by 16 (a 4 x 4 square into one pixel) might work too.

Please still save the resized images uncompressed!

Extra hint: If you do plan to resize your images, then do the background cropping first ;)

Crop the images so that the X and Y values can be divided by 4!

For example, cropping an image to 5200 x 2800 pixels is a great idea.

But cropping it to 5185 x 2800 would mean you will struggle later on!
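Here is a minimal NumPy sketch of the crop-then-divide approach, assuming the image is already loaded as an array: first crop (centred) so width and height are divisible by the factor, then average each square block of pixels into one pixel. With `factor=2` each 2 x 2 square becomes a single pixel, which is the "divide by 4" case described above. The plain block average is a stand-in chosen for clarity; photo editors use their own resampling filters, so results will differ slightly.

```python
import numpy as np

def crop_to_multiple(img, factor):
    """Centre-crop an H x W (x C) array so both sides are divisible by `factor`."""
    h, w = img.shape[:2]
    new_h, new_w = h - h % factor, w - w % factor
    top, left = (h - new_h) // 2, (w - new_w) // 2
    return img[top:top + new_h, left:left + new_w]

def block_downsample(img, factor):
    """Average each factor x factor square of pixels into one pixel.

    factor=2 halves width and height, i.e. four pixels become one (the pixel count
    drops to a quarter). The image must already be cropped to a multiple of factor.
    """
    h, w = img.shape[:2]
    if h % factor or w % factor:
        raise ValueError("crop the image to a multiple of the factor first")
    blocks = img.reshape(h // factor, factor, w // factor, factor, *img.shape[2:])
    return blocks.mean(axis=(1, 3))
```

The result is a float array, so round and convert it back to 8 bits per channel before saving it uncompressed.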

After this your images should be significantly smaller :)

Colors anyone?

Getting down from about 20MB to about 12MB is a good first step (your results will differ depending on image resolution!)

So far our images are still uncompressed and in full colors, a bit much if you only need a model and create the texture from the high res pics later.....

Uncompressed is good, as we don't have to worry about compression creating unwanted blocks of pixels.

But we certainly won't need 16 million colors to stick some images together.

The key is to reduce the number of colors wisely!

Most simple photo or image editors are too limited for use on really colorful and complex images - more tricks for this a bit further down under "dusting"...

The best way to reduce colors is by using a fixed color selection (palette).

Some of the expensive programs can search through all images inside a folder to find the most commonly used colors throughout.

This gives the best results and is well worth the extra processing time.

On the slightly lower end, you can still try creating your own selection.

Example: Reduce one image to 256 colors and save the resulting colors.

Apply the same color reduction with the saved selection on other images and zoom in to check for areas of badly messed up colors - trust me you will know what I mean here if you spot it!

In a lot of cases this works out reasonably well, compared to the pro solution above.
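The "most commonly used colors across a folder" idea can be sketched in Python with NumPy. This is a crude illustration, not how any commercial program does it: the colors of all images are counted together, the most frequent ones (256 by default) form a shared palette, and every pixel is replaced by the nearest palette color. Counting exact colors in photos would spread the votes too thin, so each channel is first reduced to `keep_bits` bits (5 here, an assumption) and placed in the middle of its bin.

```python
import numpy as np

def shared_palette(images, n_colors=256, keep_bits=5):
    """Pick the n_colors most frequent colours across a whole image set.

    images: list of H x W x 3 uint8 arrays. Each channel is first reduced to keep_bits
    bits (an assumed simplification) so near-identical shades are counted together.
    """
    shift = 8 - keep_bits
    pixels = np.concatenate([img.reshape(-1, 3) for img in images])
    # Clear the low bits, then move to the middle of the bin.
    binned = ((pixels >> shift) << shift) + ((1 << shift) >> 1)
    colors, counts = np.unique(binned, axis=0, return_counts=True)
    order = np.argsort(counts)[::-1][:n_colors]  # most frequent first
    return colors[order]

def apply_palette(img, palette, chunk=65536):
    """Replace every pixel with the nearest palette colour (squared RGB distance)."""
    flat = img.reshape(-1, 3).astype(np.int32)
    pal = palette.astype(np.int32)
    out = np.empty_like(flat)
    for start in range(0, len(flat), chunk):  # work in chunks to limit memory use
        part = flat[start:start + chunk]
        d = ((part[:, None, :] - pal[None, :, :]) ** 2).sum(axis=-1)
        out[start:start + chunk] = pal[d.argmin(axis=1)]
    return out.reshape(img.shape).astype(np.uint8)
```

The nearest-colour search here is slow on full-size photos, and the same warning as above applies: zoom in and check for blocky, messed-up areas before using the reduced images.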

I tried to do this step right at the camera level, but it turned out I cannot set a fixed color range - so it was useless.

You might want to try going down to a custom selection of 8 colors if the object allows for it - but double check first that neither the object nor anything you need as markers for the alignment shows a blocky look!

Chunks of blocks in slightly messed up colors will most certainly appear differently in the next image of the sequence - this can make good stitching impossible, so use fewer than 256 colors with caution!

Too many images required, not enough memory in my computer - HELP!!!

Stop yelling if you can't afford a 2TB RAM workstation with at least 64 CPUs! ;)

Been there done that and as said, realised that even 32GB of RAM can be a struggle for more than 250 images of good quality.

A possible way out can be DUSTING.

Be it something really shiny or reflective, something with neon colors or just not really "compatible" with your cam and lights - you need to fix it if you want to get results.

To be clear: We are talking about creating images for a 3D model, not for the texture - these can be taken separately if required.

Ever tried to scan a polished stainless steel object?

A nightmare for the unprepared!

Same for high shine, like on some ceramics or painted objects.

You can tweak the lights and use camera angles to support the cause, but you cannot eliminate reflections and the problems caused by them.

The angle changes for the next image and suddenly something that was so bright it was almost white is now almost black - your stitching will fail here unless you have enough extra pics of this area to compensate.

Dusting is a trick I use to compensate for all this stuff that no one really needs anyway.

With a black background the cheapest option is baking flour or corn starch.

Use a fine sieve to get a nice and fluffy powder then like on a cake use the sieve to sprinkle the white powder all over it.

A slight blow or nearby fan removes excess and you can see where you need to add more dust.

On some surfaces this won't work without additional preparations though.

If water does not run off the surface but instead wets it evenly, you can get the flour to stick better - but avoid drops of water!!

A cloth to wipe some oil over the surface is the next best step - be careful on plastics though and try it out first.

For really hardcore objects a masking spray as used for airbrush can help.

These can either be peeled or rubbed off or washed off with water.

If white is too much for your cam then just add some dry food coloring or other fine coloring pigments to the flour.

Putting it all in a breakfast bag and sealing it is the best option - just shake the heck out of it for a few minutes.

Handling can be tricky - if in doubt make sure you can just push the object from your dusting area onto the rotating platform, or onto a glass plate to transport it to the platform.

In some cases I just put the object on the platform and dust it there.

When done I use a controlled airflow and a fine nozzle to clean the object and platform.

Special case: how to get a really complete and closed model?

All good 3D editors allow for object repairs.

Since your finished object was always flat on the platform, you can just create a plane to close the hole left by the platform.

For the cases where the bottom of the object isn't actually flat:

Just do the same as before: Take a sequence of pictures.

This time, however, you place the object so that you get a good look at the underside.

Complex things, like something hollow might even require a lot of sequences if you want to care for things like wall thickness of a complex ceramic plate or so.

You then create a 3D model from it as before.

Finally you need to combine the two - it is important to keep the distance from the object and to use the same cropping and editing things you did before ;)

Depending on the amount and size of your entire image collection you might have to tweak around here and there.

If you only have an older laptop with 8GB then you simply don't want to stitch 500 high res pics....

You can use different software or might even have access to some really nice apps and servers...

If not, then try out what works best for your software and computing hardware.

Sometimes a model comes out really good if you use just the point clouds to combine the sequences.

And if you can reduce the amount of points required then even an old laptop might do the trick.

Doing the stitching point based, however, requires a lot of work.

You need to clean up the clouds, often manually align them to known reference points....

And only once you at least render a preview do you actually see if it could look like the object you are trying to copy.

The hard work comes once that is done, or if you skip the manual labour and do it automatically.

Every image needs to be loaded, aligned, transformed and referenced.

If the base is good it can save you a lot of hours!

Make sure you have a secure power supply - if in doubt use a UPS with a decent battery backup so you have enough time to stop, save the progress and shut down before it runs out of juice!

Nothing is worse than waiting over night, going to work and finding the thing off because you had a short interruption in the electrical grid...

Confusius the wise camel says:

Plan ahead!

Get sorted!

Re-think the approach and consider other options before it is too late!

Once you start be committed...

Jokes aside:

If you already did some simple copies with your cam and rotating table then you have a good estimation of what you need in pics and angles.

As nothing is worse than starting over, you should consider taking a whole lot more pics than your worst imaginations suggest!

Take a pic every 5°, but name them properly or reset the numbering on your cam for each new sequence.

Start your stitching with just every third or fourth picture.

If it comes out good you saved a lot of time.

In areas that are not so good: add some pics from the angles between those you already have.
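The shooting plan above (a pic every 5°, stitching with every third one first, then adding the in-between shots where needed) is easy to keep track of in a few lines of Python. These angle lists are only a hypothetical bookkeeping aid; your stitching software just needs the matching image files.

```python
def capture_angles(step_deg=5.0):
    """All turntable angles for one full round at a fixed step (5 degrees gives 72 shots)."""
    n = int(round(360 / step_deg))
    return [i * step_deg for i in range(n)]

def first_pass(angles, every=3):
    """Start the stitching with only every `every`-th picture."""
    return angles[::every]

def fill_gap(used, all_angles, start_deg, end_deg):
    """Add back the unused pictures between two angles (inclusive) for a problem area."""
    extra = [a for a in all_angles if start_deg <= a <= end_deg and a not in used]
    return sorted(set(used) | set(extra))
```

With 5° steps you get 72 pictures per round; every third one gives 24 for the first pass, and filling the area between 30° and 60° adds the 4 missing shots there. A gap that crosses 0° (say 350° to 10°) needs two calls in this simple version.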

Same for the angles of the sequences.

If you think it might be beneficial to have one more angle, then just take the additional pics.

Use only what you need to get a model good enough for your needs.

Adding a lot of pics in already good areas will only blow your memory and computing time!

Accept losses you can easily fix in the finished 3D model!!

Indentations are a prime example of blowing the budget.

Sometimes there is a cylindrical "holder" or similar going into an object.

If you don't need it: don't worry about it!

If you do need it, then consider "adding" it in the right place through editing tools once the model is finished ;)

Why waste the entire night if you can fix it with 10 minutes of editing when the model is done? ;)

Perspective matters!

If in doubt, check your model on the platform through a cardboard tube.

Check what angles suit best to get all vital details.

You might be surprised to see that you could do with far less...

Whatever you think is enough: do not disturb the setup!

If you do need additional pics after all then you do want to eliminate variables ;)