Hack Skellington - AI-powered Halloween Skeleton

by mcvella

Tired of Halloween skeletons that repeat the same 3 phrases over and over again? Using a few inexpensive hardware components plus modern technology, you can bring your skeleton to life in a truly customizable way. It might be the star of your neighborhood, or the most feared!

My family loves Halloween. Every year, we add to our collection of Halloween decorations that we use both inside and out of our house. In recent years, we've hosted an outdoor Halloween costume party for the neighborhood and friends - including a chili contest and movie.

This year, I bought a posable life-size skeleton, the first of its kind that we've owned. Maybe it was the fact that for many years we've had a non-posable, sound-activated talking figure that we are excited to bring out of storage each year, only to soon tire of the same 3 phrases ("Ha! I hope I didn't SCARE you")... It probably had something to do with the fact that I work at a robotics software company called Viam... Regardless, I decided I had to automate this skeleton.

Spending less than $150 (half the cost being the skeleton), what could I do to add interesting and unique capabilities? Adding head and arm motion with servos was a must. Being able to program when this motion occurred by reacting to the environment was critical.

Recently, I've created Viam modules for facial recognition and speech that can be used to add machine learning and AI capabilities to any robot. With this software, a webcam, and a speaker, I could have Hack Skellington intelligently interact with his surroundings. Recognize a face and welcome them to the party. Move his head to look them in the eye when they look over. Lift his arm up when they come close, giving them a good scare. Use a creepy AI-generated voice to say various things generated by ChatGPT.

I'll show you how you can do this and more (customize the project to your desires) with less than a couple hundred lines of code!

Supplies

1× Crazy Bonez Pos-N-Stay Skeleton - or other posable Halloween skeleton - I liked this one because the plastic is relatively thick and sturdy

3× MG995 Servo - or similar servos, I bought in a pack of 4

1× Portable 10000mAh or larger 5V power bank - I used one from Enegon, most brands will do - you'll want dual isolated USB ports. You may want to find one that has 3A output.

1× Arducam Day & Night Vision USB Webcam - most USB webcams will work, I chose this because it has infrared night vision

1× Mini USB speaker - I used one from HONKYOB that worked well

1× Radxa Zero Single Board computer - or other single board computer with Wifi, GPIO, I2C, USB (like Raspberry Pi, OrangePi, RockPi, etc) - I used the Radxa Zero with 2GB RAM and Wifi, you will need at least 2GB RAM, GPIO pins, and wireless connectivity

1× Various jumper wires - for connecting components

1× USB-C to USB hub - for connecting USB camera, speaker and optional mic to the Radxa Zero

1× USB Mic - optional, if you want to be able to have your skeleton respond to speech - I used a small one from KMAG

1× PCA9685 Servo Motor Driver - for driving the servos

1× Machine screw assortment kit - for attaching the servos to the skeleton

1× USB 2 Pin to bare wire adapter - for powering the PCA9685

Unscrew the Front Part of the Skull

What a fun-sounding title to start this project!

Removing the front part of the skull

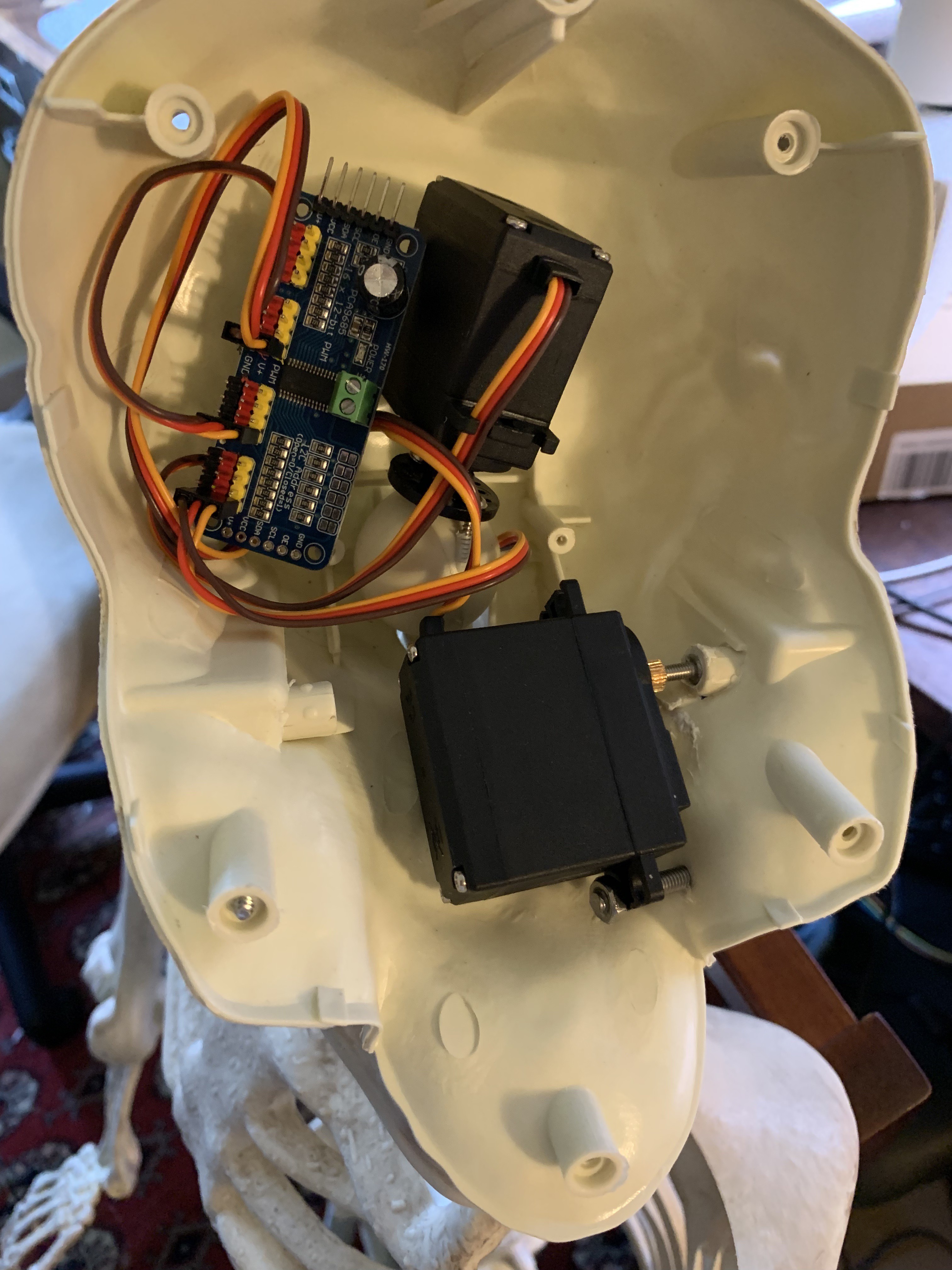

You'll need a small Phillips-head screwdriver. Remove the 5 screws holding the front portion of the skull to the back portion of the skull. Your SBC (single board computer), servo driver (PCA9685), and the servos that move the head and jaw will be housed here.

Place it to the side, and keep the screws in a safe place.

Attach the Head Servo

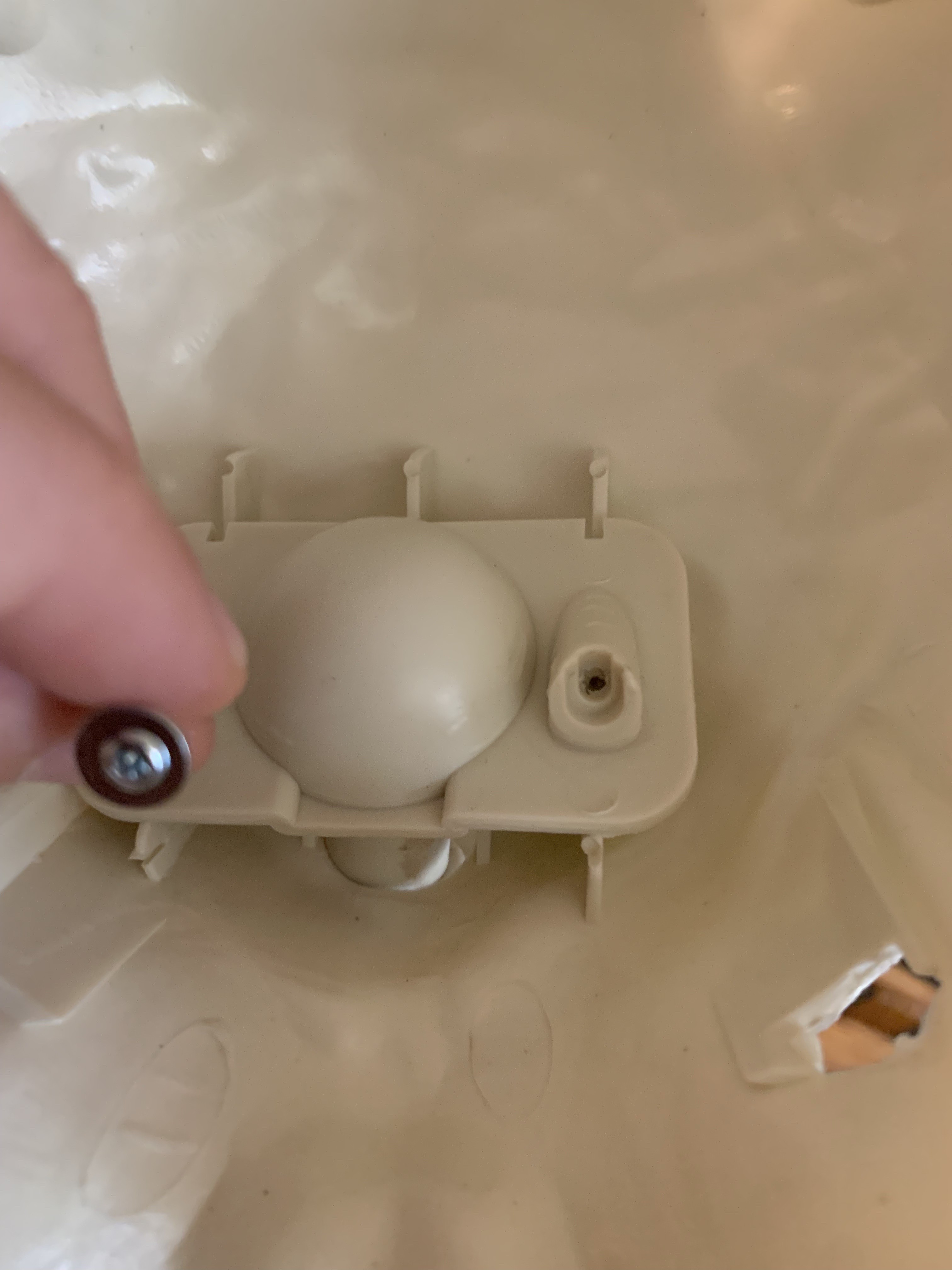

Remove the cap over the neck ball joint

Use the same small screwdriver to remove the two small screws holding the plate above the ball joint of the neck. We'll need access to this ball joint to attach a servo horn and servo in order to allow the head to programmatically rotate side-to-side.

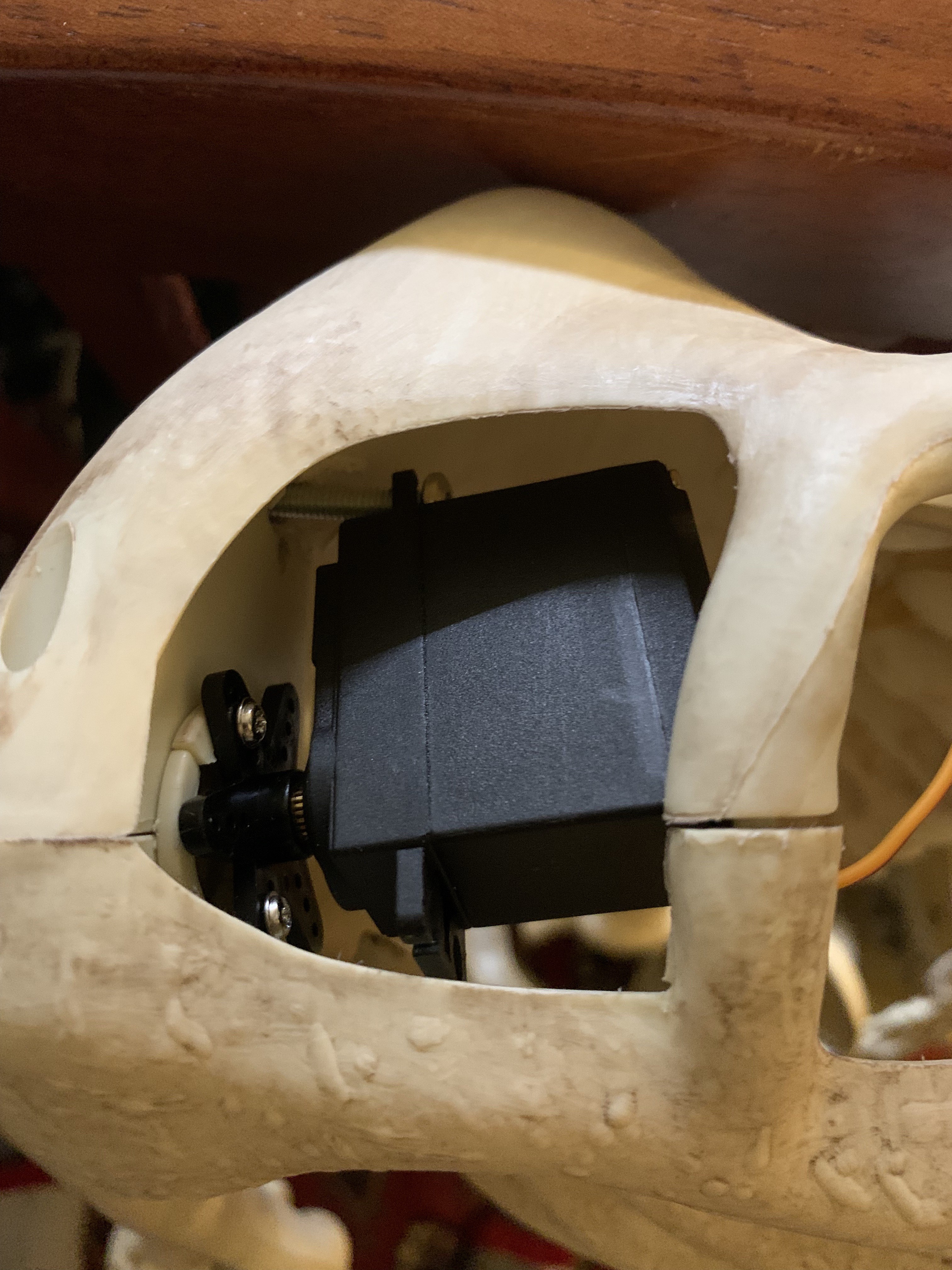

I used the round servo horn that came with the servo. In order to figure out where to place the horn, first place the servo horn on the servo spline. Then, "dry fit" the servo - meaning hold the servo horn on the ball joint and position the servo in the center of the back portion of the skull, noting where two machine screws could be used to mount the servo. Also be sure to pay attention to the angle of the head - you don't want the skull to end up looking too far down or too far up.

Positioning the head servo

Once you have the servo lined up, use a pencil to mark where you want to drill holes for the machine screws to mount the servo.

Go ahead and drill one hole, then attach one side of the servo with a machine screw, washer, and nut on the back of the skull. Again, line up the servo and neck, drill the second hole, and attach the second machine screw.

Carefully holding the servo horn in place with the neck joint, detach it from the servo. Now, take a screwdriver and screw two of the screws that came with the servo horn through the servo horn and into the neck joint.

Servo attached to the back of the skull

Fit the servo spline onto the horn and ensure the servo can rotate the skull left and right.

Wire and Test the Head Servo

At this point, you'll want to test the head servo motion:

- First, to make sure it works

- Secondly, it's fun to see half of a skeleton head move back and forth

We'll use a small single board computer (SBC) as the "brain" of the operation, as well as a PCA9685 breakout board, which does a great job controlling multiple servos. We used a Radxa Zero board with 2GB RAM and wireless connectivity because we liked the small form factor and relatively low energy usage. You can use any single board computer that runs 64-bit Linux (like a Raspberry Pi), but you'll definitely want one with built-in wireless, and at least 2GB RAM is a good idea since you'll be running machine learning on-board.

We are going to use the Viam platform to configure all component drivers and services, which will give us a streamlined configuration experience, simple way to test components, and an API we will later use to program and control our machine. Follow these instructions to install Armbian OS to your SBC, and then install viam-server on the board.

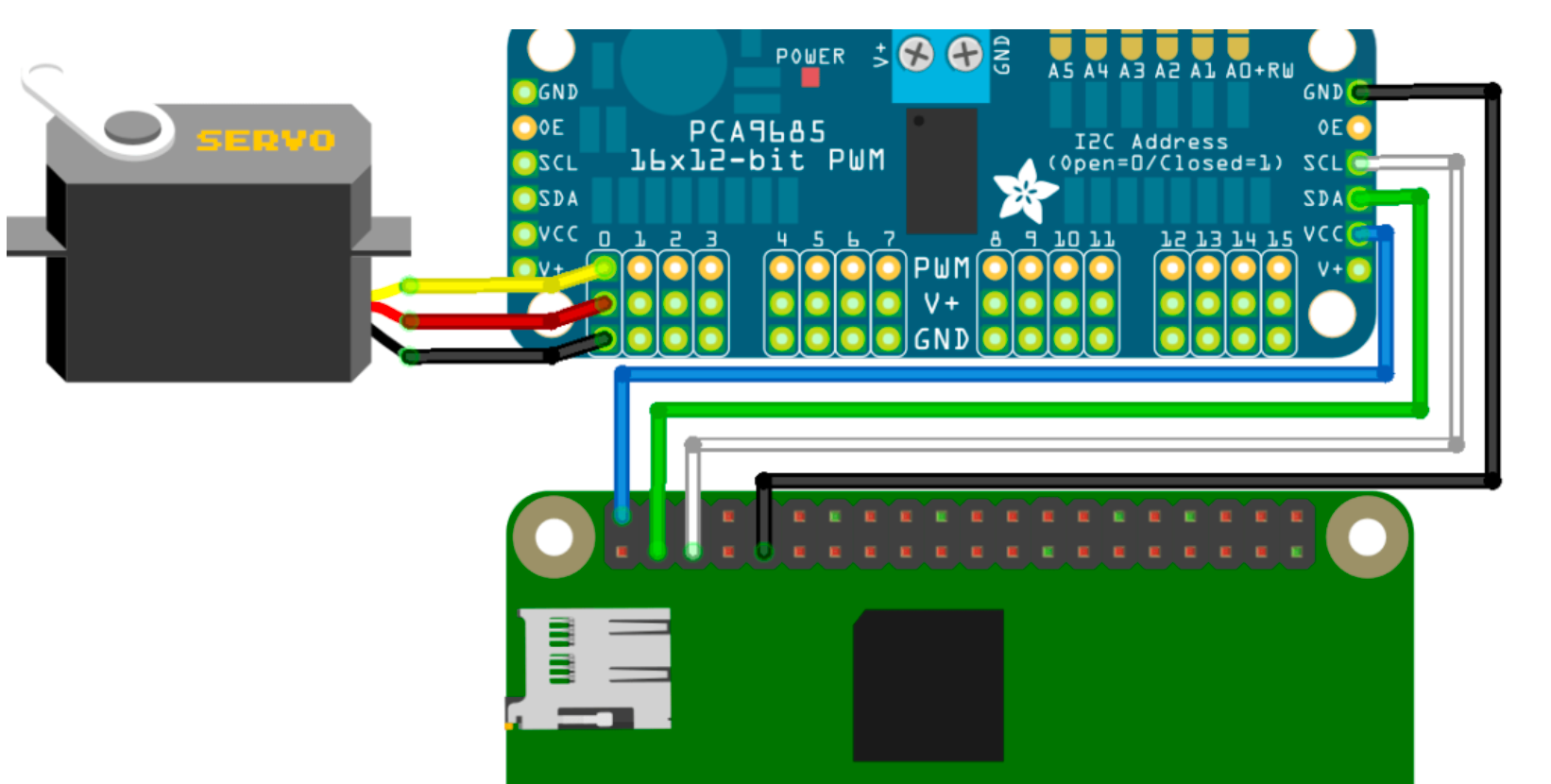

Now, power down your board and let's wire up the PCA9685 and head servo.

The PCA9685 has 16 channels that can be used for controlling 16 servos. We'll wire our head servo to the first channel, which is actually channel zero (0). Most servos have 3 wire leads attached to them, with the black wire for ground (GND), the red wire for positive (V+) and the yellow (or orange) wire for PWM.

Next, wire the PCA9685 to the SBC with four (4) jumper wires as pictured. Note that many SBCs, including the Radxa Zero we are using, follow similar pin layouts, but if you are using a different board please verify you are connecting to the proper positive, ground and I2C (SDA, SCL) pins.

Finally, we'll want to power the PCA9685 with its own 5V power supply, otherwise we could pull too much power from the SBC and things could randomly stop working due to lack of power. We'll use the USB to two bare wire adaptor, and attach the red wire to the V+ at the top of the PCA board, the black wire to GND at the top of the PCA board. We can plug this into one side of the 5V battery pack.

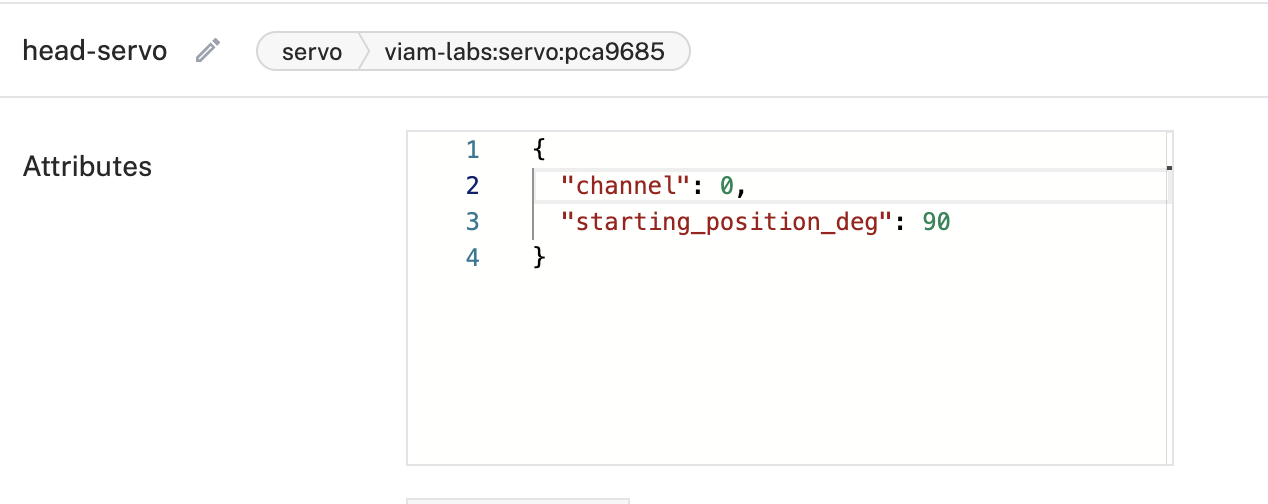
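For a sense of what the PWM wire actually carries, here is a minimal sketch of how a servo angle can map to a PCA9685 duty cycle. The Viam servo driver handles this for you, so this is background only. The 50 Hz frame rate and the 0.5-2.5 ms pulse range are assumptions typical of hobby servos, not values from this project; check your servo's datasheet.

```python
# Assumptions (not from the article): a 50 Hz servo frame and a 0.5-2.5 ms
# pulse range over 0-180 degrees. Adjust these for your own servo.
PWM_FREQUENCY_HZ = 50
PCA9685_RESOLUTION = 4096  # the PCA9685 has 12-bit PWM resolution
MIN_PULSE_MS = 0.5
MAX_PULSE_MS = 2.5


def angle_to_pulse_ms(angle_deg: float) -> float:
    """Linearly map an angle in [0, 180] to a pulse width in milliseconds."""
    angle = max(0.0, min(180.0, angle_deg))  # clamp out-of-range requests
    return MIN_PULSE_MS + (MAX_PULSE_MS - MIN_PULSE_MS) * angle / 180.0


def angle_to_ticks(angle_deg: float) -> int:
    """Convert an angle to the number of 'on' ticks out of 4096 per frame."""
    period_ms = 1000.0 / PWM_FREQUENCY_HZ  # 20 ms at 50 Hz
    return round(angle_to_pulse_ms(angle_deg) / period_ms * PCA9685_RESOLUTION)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(angle, angle_to_pulse_ms(angle), angle_to_ticks(angle))
```

Under these assumptions, the centered 90 degree position corresponds to a 1.5 ms pulse, or roughly 307 of the 4096 ticks in each 20 ms frame.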

Now, we can power on the SBC again, and go to the Viam app to configure and test the servo.

From your machine's Config tab, click "Create Component" at the bottom of the screen, choose "servo", then choose model "pca9685". Now name it "head-servo" and configure the attributes as shown. We'll choose the servo starting position of 90 degrees so the head can start centered and from there move left or right freely. Be sure to "Save config" when done.

We can now test that the servo is connected and configured properly. Go to the "Control" tab. You should see a card for the servo, and from there you can try moving the skeleton's skull from side to side. If 90 degrees is not in the center, detach the servo from the horn, re-center, and re-attach. If things are not working, look in the "Logs" tab for any errors. If you get stuck, you can always ask for help in the Viam Discord community.
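If you would rather test from code than from the Control tab, a sweep like the following is one way to do it with the Viam Python SDK. This is a sketch rather than code from the project: the sweep range, step, and pause are arbitrary choices, and the address and API key values are placeholders you would supply yourself.

```python
import asyncio


def sweep_angles(center: int = 90, span: int = 30, step: int = 15) -> list:
    """Angles for one sweep: center -> left -> right -> back to center."""
    left = list(range(center, center - span - 1, -step))
    right = list(range(center - span + step, center + span + 1, step))
    back = list(range(center + span - step, center - 1, -step))
    return left + right + back


async def sweep_head(address: str, api_key: str, api_key_id: str) -> None:
    """Connect to the machine and move "head-servo" through one sweep."""
    # Imported here so sweep_angles() can be used without the SDK installed.
    from viam.components.servo import Servo
    from viam.robot.client import RobotClient

    opts = RobotClient.Options.with_api_key(api_key=api_key, api_key_id=api_key_id)
    robot = await RobotClient.at_address(address, opts)
    try:
        head = Servo.from_robot(robot, "head-servo")
        for angle in sweep_angles():
            await head.move(angle)
            await asyncio.sleep(0.5)  # give the servo time to reach the angle
    finally:
        await robot.close()
```

You could run it with `asyncio.run(sweep_head(address, api_key, api_key_id))`. Starting with a small span keeps the head from straining against the neck joint if the horn is not yet centered.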

The Jaw Servo

What's an animatronic Halloween skeleton without jaw movement? Not a very good one. I had a bit of trial and error figuring out how to get a servo set up to control the hinged jaw of the skeleton. In the end, some good old fashioned super glue did the trick.

First, find a machine screw that threads into the servo spline. Now, find a drill bit that is slightly larger than the head of that screw and carefully drill into the hinge of one side of the jaw. Make sure the screw head can be pushed all the way in, drill larger if needed. A snug fit is preferred over a loose fit.

Now, screw the machine screw tightly into the servo and "dry fit" the servo into a position where the servo can be attached to the skull in a similar way to how we attached the head servo to the outside of the skull. Note that you'll want to ensure that there is room for the servo to move to open the jaw, ideally that the zero servo position is a fully closed jaw. You should be able to move the servo position slowly with the screwdriver once the screw is tight.

Mark where the screw hole(s) should be to hold the servo in place, drill through, and attach the screws and nuts.

Now, put some super glue into the jaw hinge, push the servo screw into it, and let dry for at least half a day.

Once it's dry, wire it to the PCA9685 at channel 7 (or another channel if you prefer). Then repeat the configuration steps that we did for the head servo in the Viam app, but this time name it "jaw-servo" and configure it to use channel 7 and a starting position of 0 (which is the default, so we could alternatively leave starting_position_deg out of the config).

Test it out in the Control tab! You likely won't be able to go much further than 30 degrees to open the jaw to its full extent.

The Arm Servo

Here, we are following the same playbook as the previous servos, but this time we'll attach a servo horn to the inner arm socket joint.

Since the PCA9685 board will be kept in the skull, route the servo wire behind the skeleton and into the hole behind the spine in the back of the skull.

Repeat the wiring, configuration and testing process. We named it "right-arm-servo" and used channel 15.

If you are happy with each of the servos, you may now want to "lock" the screws holding the servos in place by adding a bit of super glue where each screw meets its nut; otherwise they will loosen over time (unless you used lock-nuts).

Adding the Camera

We'll want the camera to "blend in" and take a natural position for taking images. We'll use these images with a face detection machine learning model, so that Hack Skellington can react when someone is near!

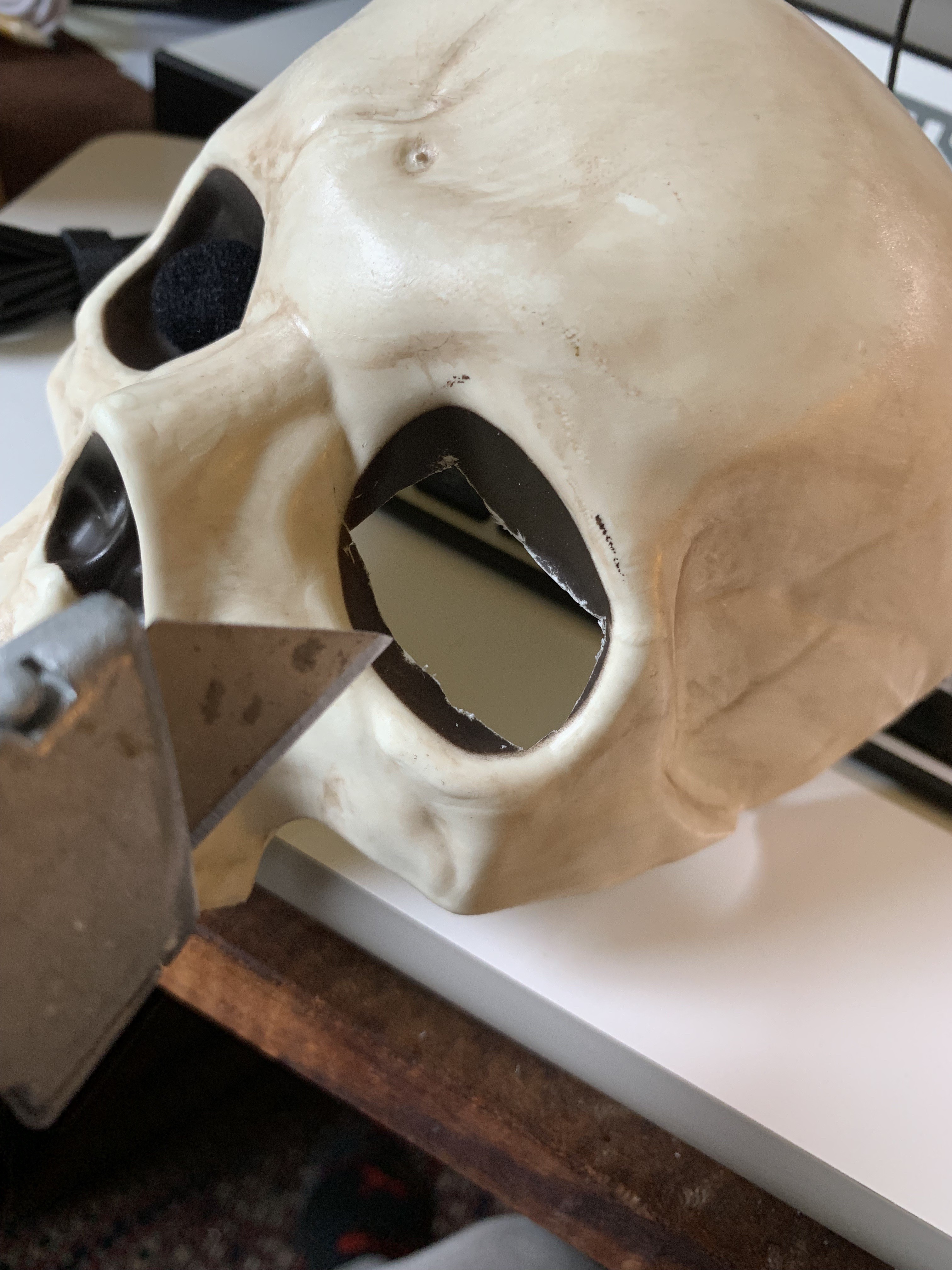

Measure the size of the webcam, and using a razor blade knife, carefully cut a hole into one of the skull eye sockets so the camera can snugly fit into the hole from inside the front of the skull.

Once you have this set up, plug the USB camera into the SBC, and go to the Config tab of the Viam app. Click "Create Component" at the bottom of the screen, choose "camera", then choose model "webcam". Name it "cam".

If your SBC is on, you should see an option in the "video_path" attribute for you to choose the connected webcam. Click "Save config" and go to the Control tab. You should now be able to test the camera stream from the camera you attached!

Tidying Up a Bit

Now that we have all of the hardware configured and tested, let's tidy up the setup a bit.

I used velcro tape and velcro ties to attach the battery pack to the back of the skeleton, so it is easy to plug in the SBC and PCA9685 as well as to remove the battery pack for charging.

I also used some electrical tape in the back portion of the skull to tape down the SBC and some of the wires. Then I carefully put the front of the skull back in place and screwed it back together.

Add Machine Learning to Recognize Faces

One way we'll make this skeleton more advanced is by adding machine learning capabilities, so that it can see when a person is near using a face detection model.

The Viam platform makes installing capabilities such as this simple with the Viam modular registry.

In your Config tab, go to "Services", then click on "Create Service". Choose type "Vision", then model "detector:facial-detector". You'll see a link to documentation for this module - you can later try changing some of the parameters for different behavior. For now, name the service "face-detector", leave the attributes empty, and hit "Save config".

The module may take a few minutes to install. In the meantime, we can set up a "transform camera". A transform camera is a Viam virtual camera model that allows you to take images from a physical camera and transform them with the output from machine learning detectors, classifiers, and more. This makes it easy to test if a machine learning model is working as expected.

Go to the "Components" tab, click "Create component", choose type "camera" and model "transform". Name it "face-cam", and add the following attributes:

{
  "pipeline": [
    {
      "type": "detections",
      "attributes": {
        "confidence_threshold": 0.5,
        "detector_name": "face-detector"
      }
    }
  ],
  "source": "cam"
}

This configuration simply says that we are using the output of our physical camera that we named "cam", we are applying detections from our vision service detector that we called "face-detector", and we want to show detections when the confidence of a detection meets or exceeds 0.5 (50% confidence).
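To make the threshold concrete, here is a small sketch of the same filtering done in code. The `Detection` class is a hypothetical stand-in with the bounding box, confidence, and class name fields that a detection carries; it is not the SDK's own type, and the helper names are mine.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    """Hypothetical stand-in for one detection (pixel coordinates)."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int
    confidence: float
    class_name: str = "face"


def confident(detections: List[Detection], threshold: float = 0.5) -> List[Detection]:
    """Keep detections whose confidence meets or exceeds the threshold."""
    return [d for d in detections if d.confidence >= threshold]


def widest(detections: List[Detection]) -> Optional[Detection]:
    """Return the detection with the widest bounding box, or None if empty."""
    return max(detections, key=lambda d: d.x_max - d.x_min, default=None)
```

Picking the widest confident detection is one simple way to decide which face to react to when several people are in view, since a wider box usually means a closer face.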

We can now go to the Control tab, and test that our face detections are working. If so, you should see a red bounding box drawn around your face when you look towards the camera!

Adding the Speaker and Speech Capabilities

We'll want Hack Skellington to make comments when he sees his next "victim".

Part one is easy: simply plug the USB speaker into the USB hub.

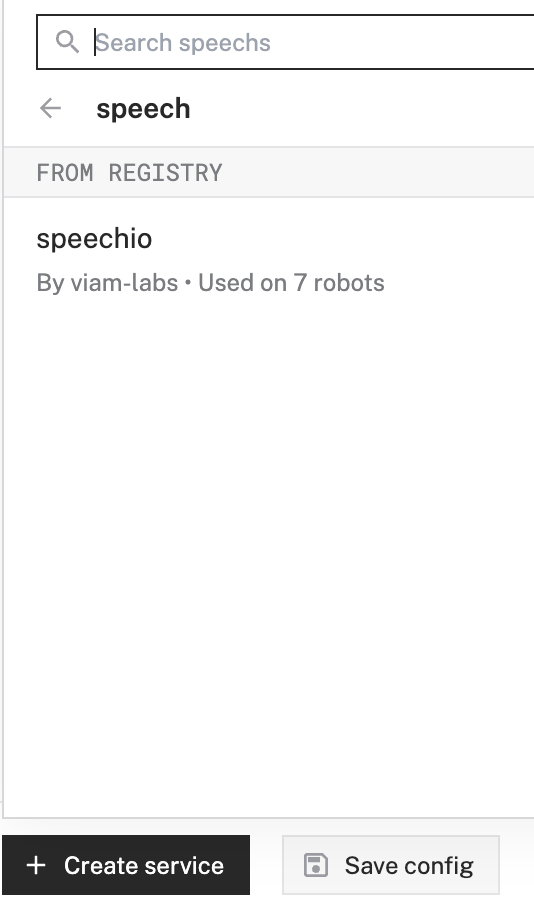

We are going to use a modular resource called speech to convert text-to-speech and generate completions from ChatGPT. There are some prerequisites to install, so follow the instructions, running the commands on your SBC to install various libraries that will enable sound and speech.

Now, go ahead and install speech as a modular resource, similar to what we did with the facial-detection module. In your Config tab, go to "Services", then click on "Create Service". Choose type "speech", then model "speechio".

For best results, you'll want to use ChatGPT to generate "completions", which are essentially AI-powered varied responses to prompts that our script will give. You'll also want to use ElevenLabs to generate the AI voice. These both require subscriptions. You can use Google speech and forego the AI completions if you don't want to subscribe to these services, but in this tutorial we are going to use them.

Configure the attributes for the speech module as shown, adding your own account details. You can try using a different "persona" if you like; it can be anything, as ChatGPT will use it to guide its completions.

{
  "disable_mic": true,
  "speech_provider": "elevenlabs",
  "completion_provider_key": "sk-key",
  "speech_provider_key": "keyabc123",
  "completion_provider_org": "org-abc123",
  "cache_ahead_completions": true,
  "completion_persona": "a scary halloween skeleton"
}

You can also choose a different ElevenLabs voice or even train your own. To change the voice, add this attribute. I trained a voice based on Vincent Price and called it "vincent".

"speech_voice": "vincent"

Bring Hack Skellington to Life!

I created code that does the following:

- Starts one loop that looks for people's faces

- If a face is detected, rotates the head towards the face

- If a face is within a certain distance (estimated by its width in the image frame), raises the arm; otherwise lowers it

- If X (default 10) or more seconds have passed since last seeing a face, gets a completion based on a random phrase like "glad to see you here, hope you never leave!"

- Starts another loop that polls whether Hack is currently speaking and, if so, moves the jaw up and down randomly
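The decisions in that list can be sketched as a few small functions. This is an illustration of the logic, not the code from the repository: the frame width, field of view, closeness ratio, and function names are all assumptions, and the sign of the head correction depends on how your servo is mounted.

```python
import random
import time
from typing import Optional

# All constants below are illustrative assumptions, not values from the project.
FRAME_WIDTH = 640        # assumed camera image width in pixels
CAMERA_FOV_DEG = 60      # assumed horizontal field of view
NEAR_WIDTH_RATIO = 0.35  # a face wider than 35% of the frame counts as close
GREET_COOLDOWN_S = 10    # the "X (default 10)" seconds between greetings
JAW_MAX_DEG = 30         # the jaw opens to roughly 30 degrees


def head_angle_for(face_center_x: float, current_angle: float = 90.0) -> int:
    """Turn the head so the face moves toward the center of the image."""
    offset = (face_center_x - FRAME_WIDTH / 2) / FRAME_WIDTH  # -0.5 .. 0.5
    # Flip the sign here if the head turns away from the face instead.
    target = current_angle - offset * CAMERA_FOV_DEG
    return int(max(0, min(180, round(target))))


def is_close(face_width: float) -> bool:
    """Estimate closeness from how much of the frame the face fills."""
    return face_width / FRAME_WIDTH >= NEAR_WIDTH_RATIO


def should_greet(last_seen: Optional[float], now: float) -> bool:
    """Greet if no face was seen before, or not for the cooldown period."""
    return last_seen is None or now - last_seen >= GREET_COOLDOWN_S


def random_jaw_angle() -> int:
    """Pick a random jaw position to fake talking while audio plays."""
    return random.randint(0, JAW_MAX_DEG)


if __name__ == "__main__":
    now = time.monotonic()
    print(head_angle_for(480), is_close(260), should_greet(None, now))
```

Keeping these decisions as plain functions makes them easy to tune on a laptop before wiring them to the servo, vision, and speech calls.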

To run this code:

- Clone the GitHub repository. You can run this code from your laptop, but you will likely want to run it on the SBC itself.

- Install requirements

pip3 install -r requirements.txt

- Create a run.sh file that looks like this:

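#!/bin/bash
# Run from the directory that contains this script (quoted to handle spaces)
cd "$(dirname "$0")"
# Replace these placeholder credentials with your own values
export ROBOT_API_KEY=abc123
export ROBOT_API_KEY_ID=keyxyz
export ROBOT_ADDRESS=skelly-main.abc.viam.cloud
python3 skelly.py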

- Note that your ROBOT_API_KEY, ROBOT_API_KEY_ID, and ROBOT_ADDRESS values can be retrieved from the Code Sample tab in the Viam app.

- Now make the script executable (chmod +x run.sh) and run it:

./run.sh
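For reference, a script can pick up those three environment variables and connect to the machine roughly as follows. This is a sketch of the general pattern with the Viam Python SDK, not necessarily how skelly.py does it; the function names are mine.

```python
import os

REQUIRED_VARS = ("ROBOT_API_KEY", "ROBOT_API_KEY_ID", "ROBOT_ADDRESS")


def load_settings(env=os.environ) -> dict:
    """Read the connection settings, failing early if any are missing."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}


async def connect(settings: dict):
    """Open a connection to the machine using API key authentication."""
    # Imported here so load_settings() can be used without the SDK installed.
    from viam.robot.client import RobotClient

    opts = RobotClient.Options.with_api_key(
        api_key=settings["ROBOT_API_KEY"],
        api_key_id=settings["ROBOT_API_KEY_ID"],
    )
    return await RobotClient.at_address(settings["ROBOT_ADDRESS"], opts)
```

Failing early with a clear message is helpful here, since a missing or misspelled variable in run.sh would otherwise surface as a less obvious connection error.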

Hack will now entertain or frighten all your guests and intruders!

What Next?

There are lots of additional ways you could extend Hack Skellington. Here are some of the things I want to try:

- Connecting a microphone and having Hack randomly insert himself into the conversation with AI generated responses (the speech module can enable this)

- When a specific person is detected, freak them out by using their name when speaking (the facial-detection module can be configured to do this)

- Connect to a solar charging battery so Hack can be awake 24/7

Let me know if you build your own Hack and where you take the project!